
Learn more about the differences between the various versions of HTTP

What is the HTTP protocol? Why is it divided into HTTP/1.x, HTTP/2.0, and HTTP/3.0, and what are the differences between the three? The following is a detailed introduction to the differences between the versions of the HTTP protocol, to help you understand HTTP more deeply.

What is HTTP?

The Hypertext Transfer Protocol (HTTP) is one of the most widely used network protocols on the Internet. HTTP was originally designed to provide a way to publish and receive HTML pages. Resources requested through HTTP or HTTPS are identified by Uniform Resource Identifiers (URIs).

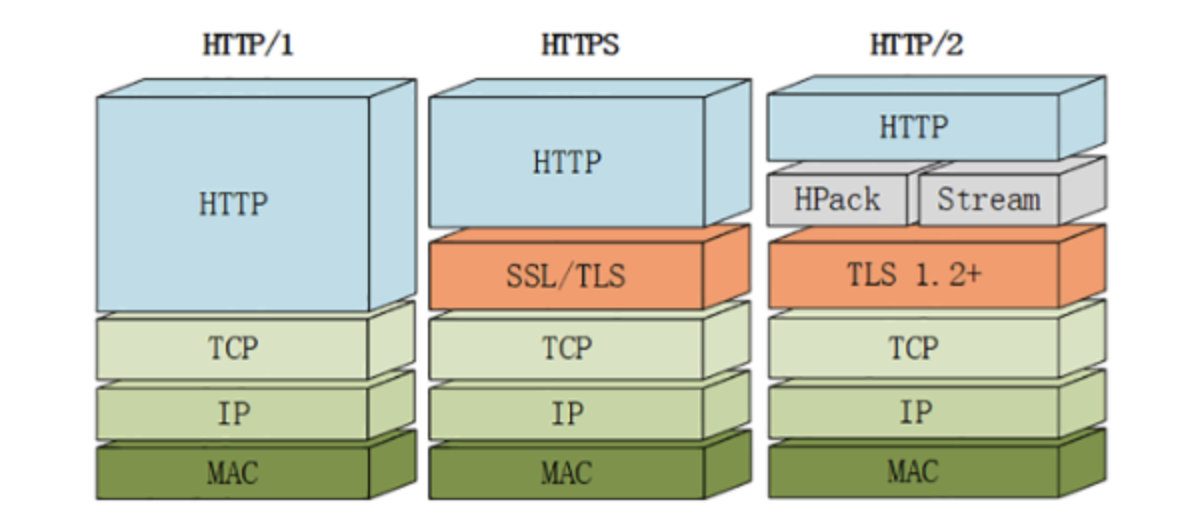

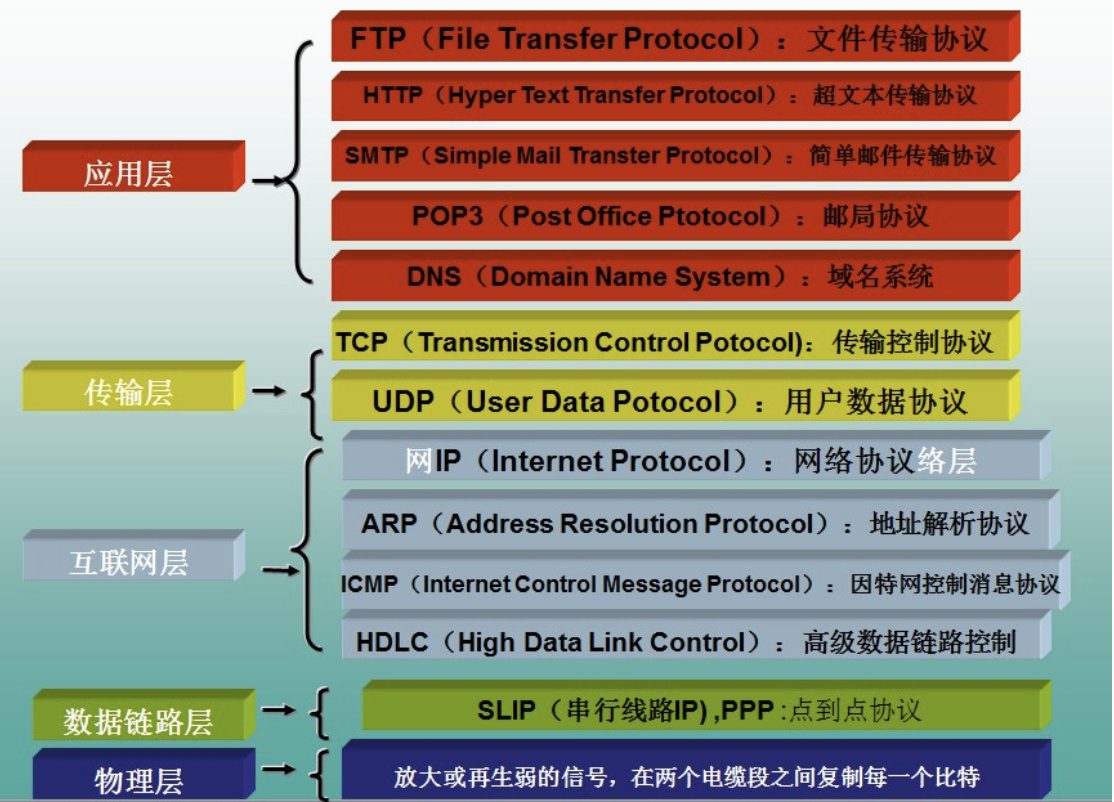

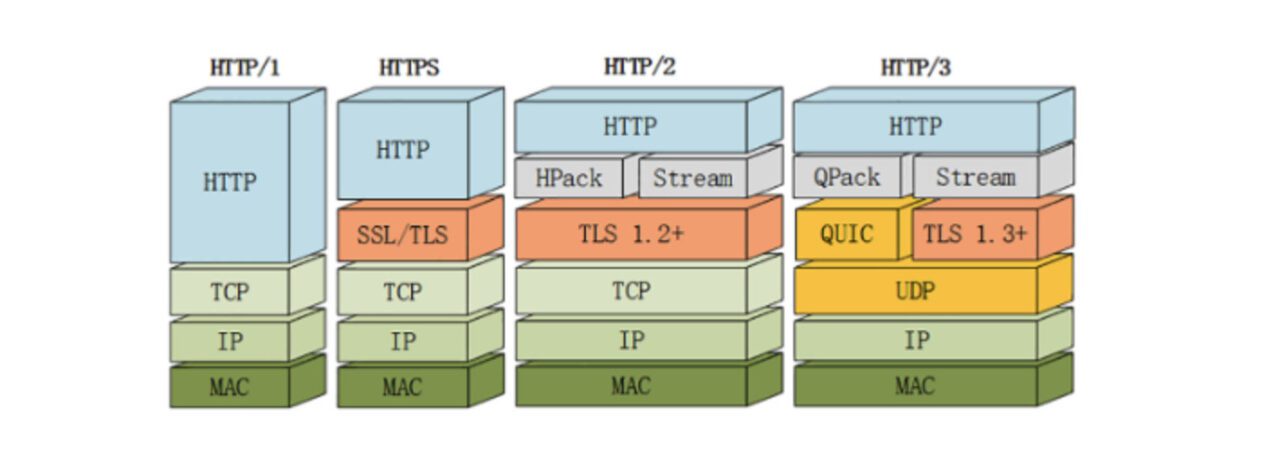

HTTP is an application layer protocol made up of requests and responses, and it follows the standard client-server model. It is a stateless protocol that runs on top of TCP/IP (each HTTP request does not depend on the state of previous requests), and in its original form it was also connectionless (one request per connection). HTTP assumes that the layer beneath it provides reliable transmission, so HTTP/1.x and HTTP/2 use TCP as their transport layer within the TCP/IP protocol suite.

The main features of the HTTP protocol can be summarized as follows:

- Simple: When a client requests a service from a server, it only needs to send the request method and path. Common request methods are GET, HEAD, and POST. Each method specifies a different type of communication between the client and the server.

- Flexible: HTTP can transmit data objects of any type; the type being transmitted is indicated by the Content-Type header.

- Request-response mode: In the original (HTTP/1.0) model, each request from the client opens a new connection, and the server closes it after processing the request.

- Stateless: HTTP keeps no memory of previous transactions. If later processing needs earlier information, that information must be sent again.

HTTP working process

HTTP consists of requests and responses and follows the standard client-server (B/S, browser/server) model. An HTTP exchange always starts with the client sending a request, after which the server sends back a response.

1. The client (browser) sends a connection request to the server (web server). This step may first require a DNS lookup to obtain the server's IP address.

2. The server accepts the connection request and the connection is established. (Steps 1 and 2 are the familiar TCP three-way handshake.)

3. The client sends an HTTP command such as GET to the server over this connection (the "HTTP request message").

4. The server receives the command and sends the corresponding data back to the client (the "HTTP response message").

5. The client receives the data sent by the server.

6. After sending the data, the server closes the connection (the TCP "four-way wave", i.e. the four-step connection teardown).

In summary, the client/server transmission process can be divided into four basic steps:

1) The browser establishes a connection with the server (TCP three-way handshake);

2) The browser sends an HTTP request message to the server;

3) The server responds to the browser request;

4) The connection is closed (TCP four-way wave).
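The request and response in steps 2 and 3 are plain-text messages. The minimal Python sketch below assumes an HTTP/1.0-style exchange where the server closes the connection after responding. `build_request`, `parse_response`, and `fetch` are hypothetical helper names written for illustration, not part of any library.

```python
import socket


def build_request(method: str, host: str, path: str = "/") -> bytes:
    """Build a minimal HTTP/1.0 request message (step 2)."""
    lines = [
        f"{method} {path} HTTP/1.0",  # request line: method, path, version
        f"Host: {host}",
        "Connection: close",          # ask the server to close after responding
        "",                           # an empty line ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_response(raw: bytes) -> tuple[int, dict[str, str], bytes]:
    """Split a raw response (step 3) into status code, headers, and body."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status = int(status_line.split(" ", 2)[1])  # "HTTP/1.0 200 OK" -> 200
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status, headers, body


def fetch(host: str, path: str = "/", port: int = 80) -> tuple[int, dict[str, str], bytes]:
    """Steps 1-4: connect, send the request, read until the server closes."""
    with socket.create_connection((host, port), timeout=10) as sock:  # TCP handshake
        sock.sendall(build_request("GET", host, path))
        chunks = []
        while chunk := sock.recv(4096):  # b"" means the server closed the connection
            chunks.append(chunk)
    return parse_response(b"".join(chunks))
```

Calling `fetch("example.com")` would return the status code, headers, and body of that site's home page. It needs network access. The two message helpers can be tried offline.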

HTTP/1.x Principles

In HTTP/1.0, each HTTP request normally opens its own TCP connection. Every transfer therefore pays for a three-way handshake and a four-way wave, which adds latency.

HTTP/1.1 made persistent connections (keep-alive) the default. The TCP connection stays open across requests, which removes part of this delay.
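A rough Python model shows why reusing the connection helps. It is a simplification that assumes a TCP handshake costs 1 RTT, each request/response costs 1 RTT, and requests are sent one after another. Slow start, TLS, and server processing time are ignored.

```python
def total_rtts(num_requests: int, keep_alive: bool) -> int:
    """Approximate latency, in RTTs, of sequential requests to one server (simplified model)."""
    handshake_rtts = 1  # TCP three-way handshake before the first request byte
    exchange_rtts = 1   # one request/response round trip
    if keep_alive:
        # One connection is opened once and reused for every request.
        return handshake_rtts + num_requests * exchange_rtts
    # HTTP/1.0 style: every request pays for its own handshake.
    return num_requests * (handshake_rtts + exchange_rtts)


for n in (1, 10):
    print(n, total_rtts(n, keep_alive=False), total_rtts(n, keep_alive=True))
# 1 2 2
# 10 20 11
```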

Problems with HTTP 1.x

- Connections cannot be reused: each request goes through a three-way handshake and TCP slow start. The handshake hurts most in high-latency scenarios, while slow start hurts most when a page makes many requests for small files.

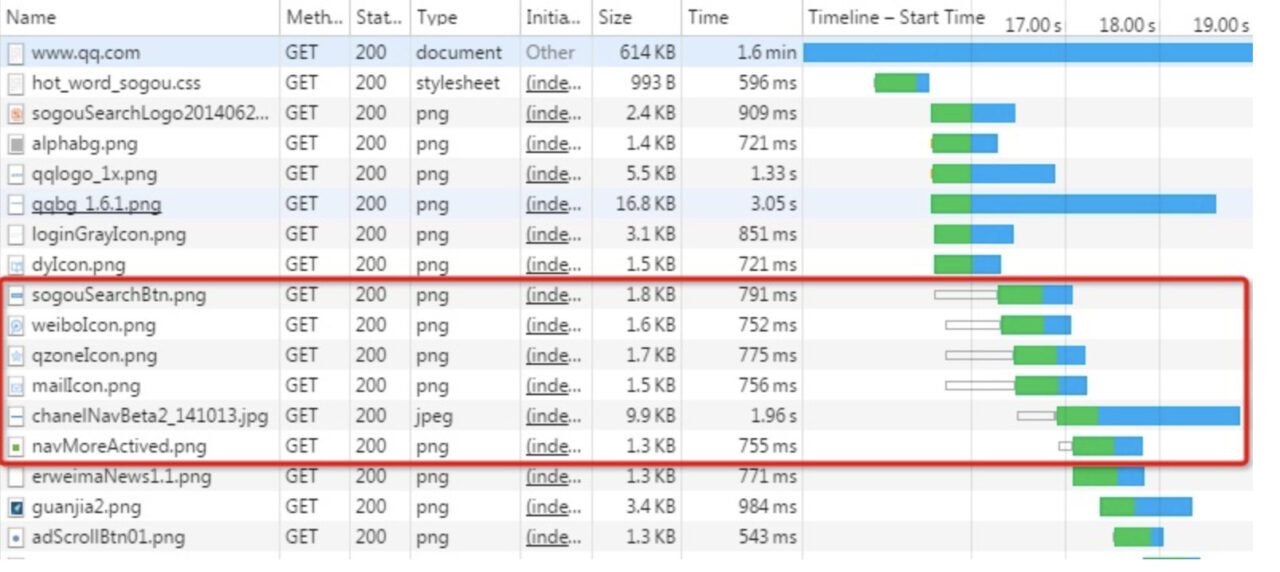

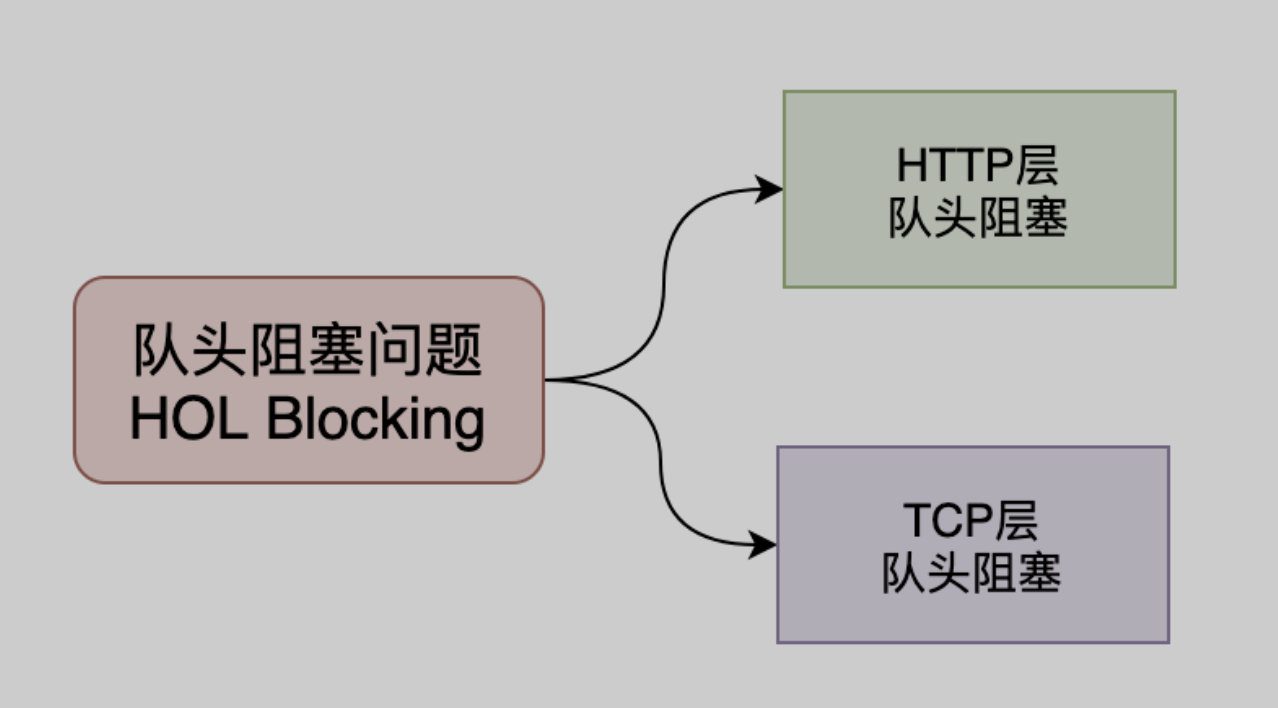

- Head-of-line blocking (HOLB): bandwidth cannot be fully used, and subsequent requests that are otherwise fine get blocked. HOLB means that a whole series of packets is held up because the first one is blocked. When a page needs many resources and the maximum number of parallel requests has been reached, the remaining resources must wait for earlier requests to finish before they can even be requested. The following approaches have been tried against head-of-line blocking:

- HTTP 1.0: The next request can only be sent after the previous response has returned (strict request-response). If one request does not return for a long time, all subsequent requests are blocked.

- HTTP 1.1: Pipelining was introduced to address this. The browser can send multiple requests at once to the same domain over the same TCP connection. However, pipelining requires responses to be returned in order. If an earlier request is slow (such as processing a large image), the server must wait for it to finish before returning the later responses, even if it has already processed them. Pipelining therefore only partially solves HOLB.

- Front-end workarounds: CSS sprites, inlining small images, and similar tricks that reduce the number of requests.

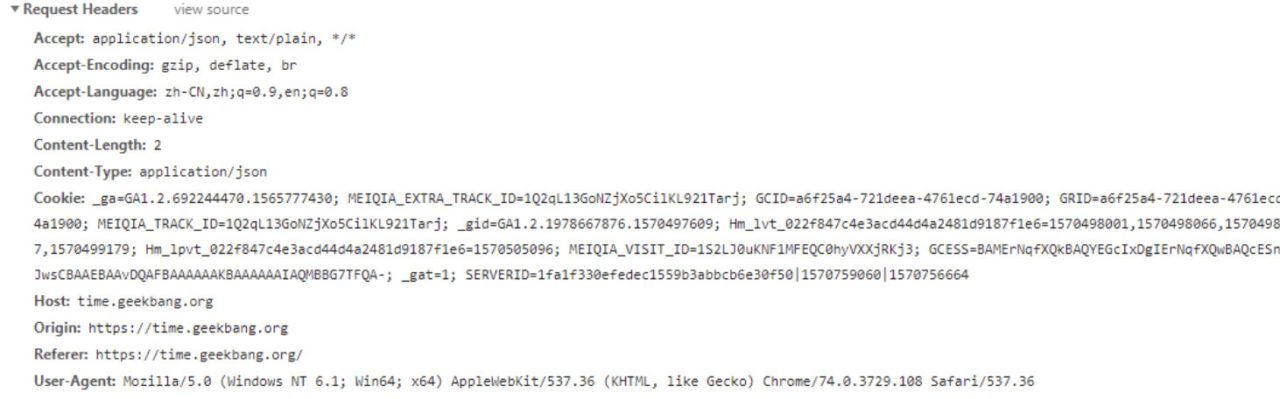

- Large header overhead: Messages carry many mostly fixed header fields such as "User-Agent", "Cookie", "Accept", and "Server" (see figure). Together these often reach hundreds or even thousands of bytes, while the body may be only tens of bytes. Such large headers noticeably increase transmission cost.

- Security: Plain HTTP/1.x transmits everything in clear text, and neither the client nor the server can verify the other party's identity, so data security cannot be guaranteed.

- No server push: HTTP/1.x has no push mechanism. There are usually two workarounds: polling, where the client asks the server for updates at regular intervals, and WebSocket.
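The head-of-line blocking described above can be illustrated with a small timing model. It makes two hypothetical assumptions. First, the server processes pipelined requests in parallel. Second, transfer time and RTT are negligible, so each number is simply the time at which a response reaches the client.

```python
from itertools import accumulate


def sequential_delivery(service_times: list[int]) -> list[int]:
    # HTTP/1.0 style: request i is only sent after response i-1 has arrived.
    return list(accumulate(service_times))


def pipelined_delivery(service_times: list[int]) -> list[int]:
    # HTTP/1.1 pipelining: all requests are sent at once, but response i
    # cannot be returned before response i-1 (running maximum).
    return list(accumulate(service_times, max))


def multiplexed_delivery(service_times: list[int]) -> list[int]:
    # Independent responses, which is what HTTP/2 multiplexing allows at the HTTP layer.
    return list(service_times)


times = [5, 1, 1]  # a slow first request (e.g. a large image), then two quick ones
print(sequential_delivery(times))   # [5, 6, 7]
print(pipelined_delivery(times))    # [5, 5, 5]
print(multiplexed_delivery(times))  # [5, 1, 1]
```

With pipelining, the two quick responses are ready at time 1 but still wait until time 5. This is the partial fix that the HTTP 1.1 item describes.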

HTTP/2.0 Principles

HTTP/2 was published in 2015. It replaces HTTP/1.x but is not a rewrite: HTTP methods, status codes, and semantics are the same as in HTTP/1.x. HTTP/2 is based on SPDY and focuses on performance. One of its main goals is to use only a single connection between the user and the website.

Problems HTTP2 solves

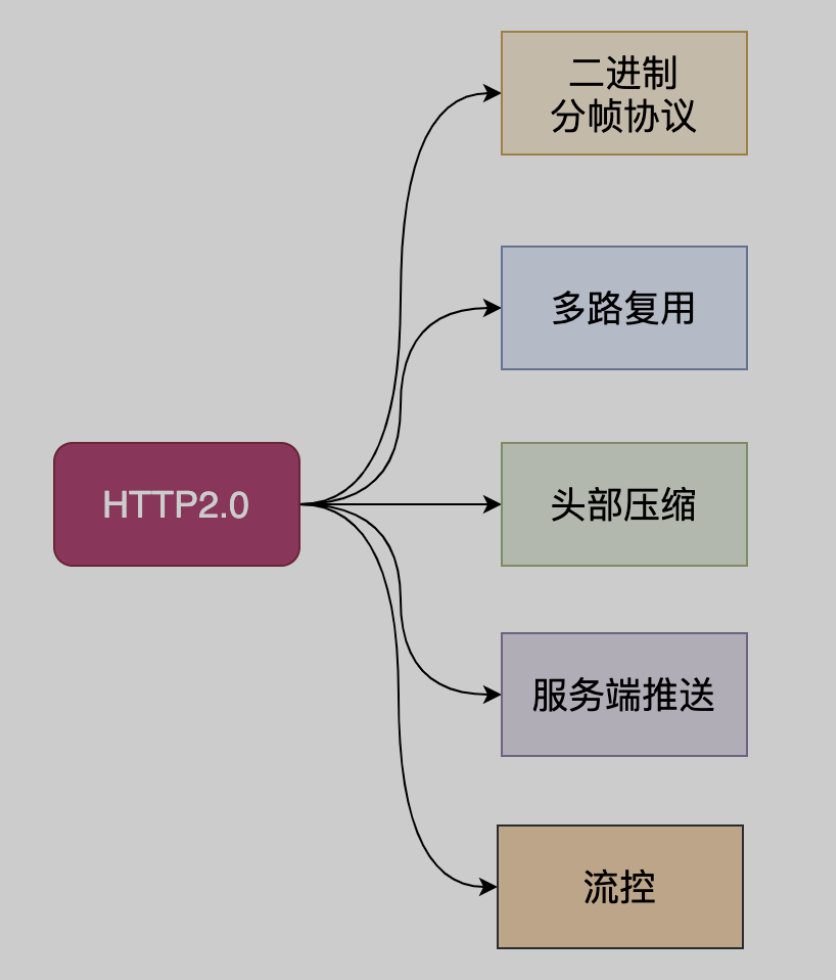

To address the problems of HTTP/1.x, HTTP/2 offers the following solutions:

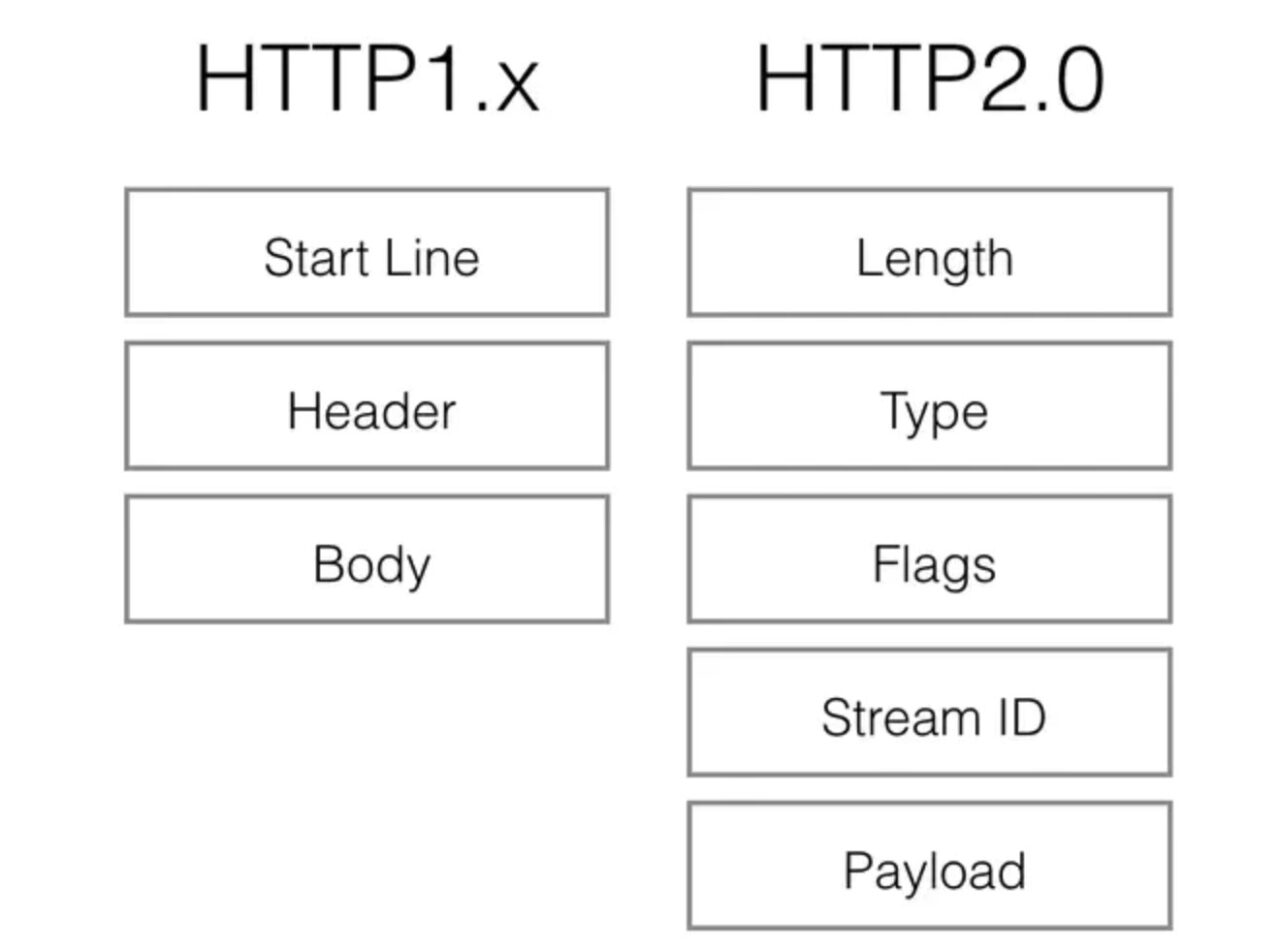

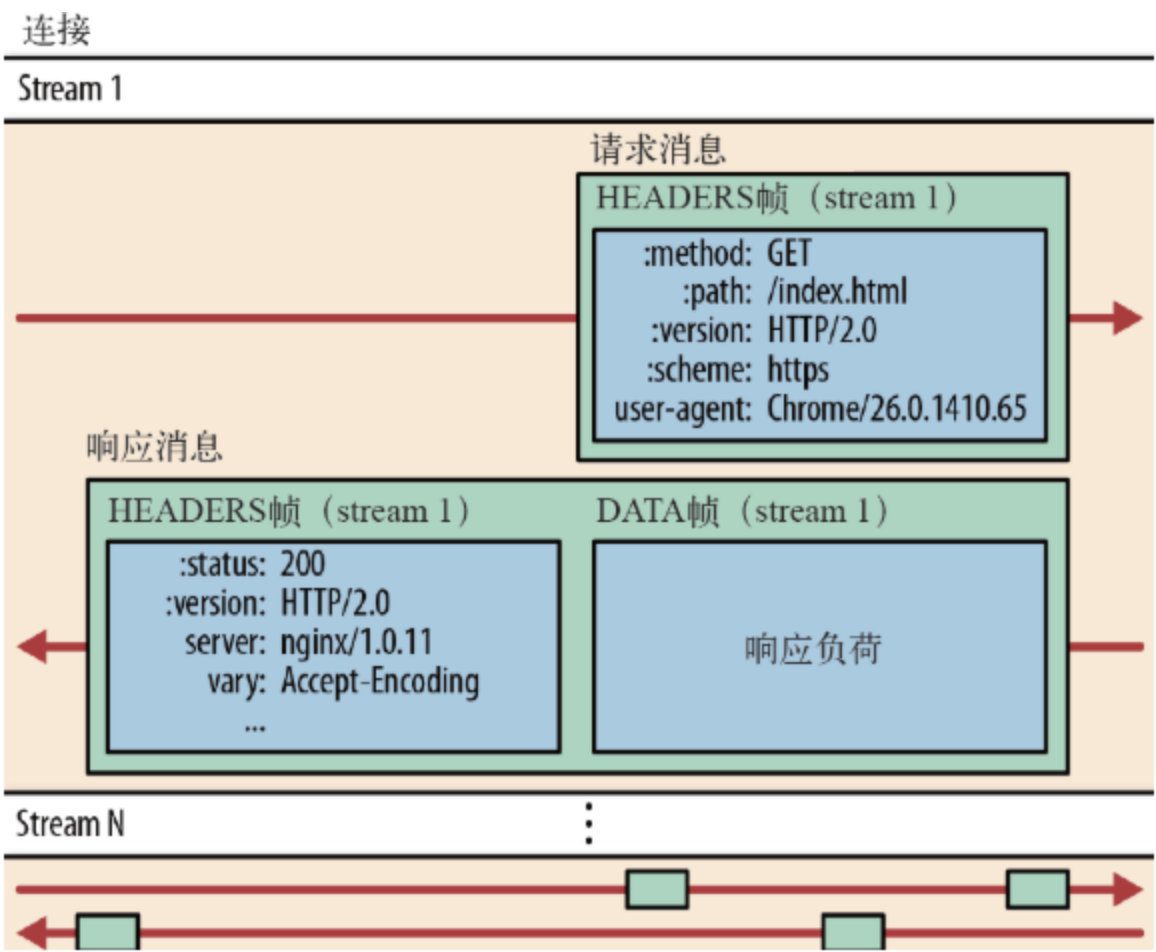

- Binary framing: HTTP/2 transmits data in a binary format instead of the text format of HTTP/1.x, and binary data is more efficient to parse. HTTP/1 request and response messages consist of a start line, headers, and an optional body, with each part separated by text line breaks. HTTP/2 instead splits request and response data into smaller frames, which are binary-encoded.

Before understanding HTTP/2, you need to know some common terms:

- Stream: A bidirectional flow of frames within a connection. A connection can carry multiple streams.

- Message: A complete logical request or response, made up of one or more frames.

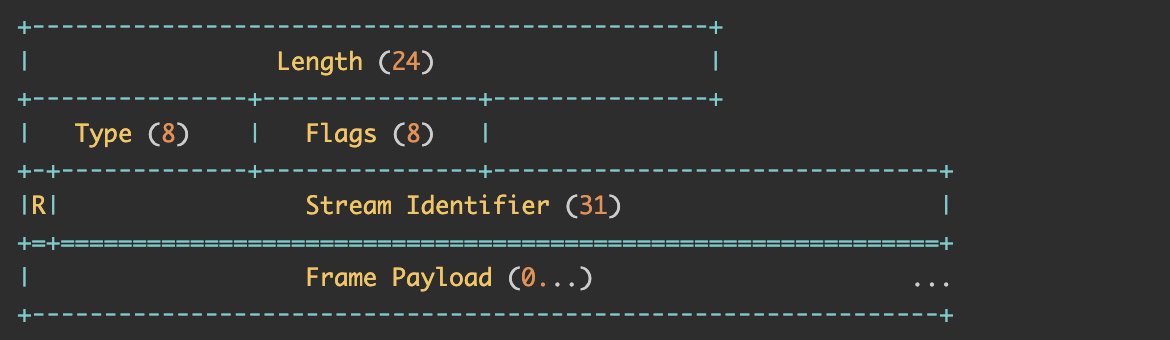

- Frame: The smallest unit of data transmission in HTTP/2. Each frame belongs to a specific stream or to the connection as a whole, and a message may consist of multiple frames. A frame is defined as follows:

Length: The length of the frame payload (24 bits, not counting the 9-byte frame header). The default maximum payload is 16 KB (2^14 bytes). Larger frames can only be sent after the receiver has raised the limit with the SETTINGS_MAX_FRAME_SIZE setting.

Type: The frame type (8 bits), such as DATA, HEADERS, PRIORITY, etc.

Flags and R: Flags are 8 bits of type-specific boolean flags (for example END_STREAM). R is a single reserved bit.

Stream Identifier: The stream the frame belongs to (31 bits). A value of 0 means the frame applies to the whole connection.

Frame Payload: Its format depends on the frame type.
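The frame layout above can be made concrete with Python's `struct` module. This sketch packs and unpacks the 9-byte frame header following RFC 7540: a 24-bit length, 8-bit type, 8-bit flags, 1 reserved bit, and a 31-bit stream identifier. The function names are illustrative, and frame payloads are not handled.

```python
import struct

FRAME_HEADER_LEN = 9
# A few frame type codes and one flag from the HTTP/2 specification.
DATA, HEADERS, PRIORITY = 0x0, 0x1, 0x2
END_STREAM = 0x1  # flag: last frame the sender will send on this stream


def pack_frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    if not 0 <= length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream id must fit in 31 bits")
    # '>' = network byte order; the 24-bit length is written as 1 byte + 2 bytes.
    # The reserved R bit is the top bit of the last 4 bytes and is sent as 0.
    return struct.pack(">BHBBI", length >> 16, length & 0xFFFF, frame_type, flags, stream_id)


def unpack_frame_header(header: bytes) -> tuple[int, int, int, int]:
    high, low, frame_type, flags, raw_id = struct.unpack(">BHBBI", header[:FRAME_HEADER_LEN])
    return (high << 16) | low, frame_type, flags, raw_id & 0x7FFFFFFF  # drop the R bit


header = pack_frame_header(16384, DATA, END_STREAM, 1)
print(header.hex())                 # 004000000100000001
print(unpack_frame_header(header))  # (16384, 0, 1, 1)
```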

Streams have several important properties:

- A connection can contain multiple streams, and the data sent on different streams does not affect each other.

- A stream can be established and used unilaterally by either endpoint, or shared by the client and server.

- A stream can be closed by either endpoint.

- The order in which frames are sent on a stream matters: the receiver processes them in the order in which they arrive.

- Each stream is identified by a unique integer ID. Streams created by the client have odd IDs, and streams created by the server have even IDs.
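The odd/even ID rule can be sketched as a small allocator. `StreamIdAllocator` is a hypothetical class written for illustration, not a library API. It reflects three rules: IDs only increase, ID 0 is never handed out because it refers to the connection itself, and IDs are 31-bit values.

```python
class StreamIdAllocator:
    """Hands out stream IDs for one HTTP/2 endpoint (illustrative sketch)."""

    MAX_STREAM_ID = 2**31 - 1  # stream identifiers are 31-bit values

    def __init__(self, is_client: bool) -> None:
        # Client-initiated streams are odd, server-initiated streams are even.
        self._next = 1 if is_client else 2

    def next_id(self) -> int:
        if self._next > self.MAX_STREAM_ID:
            # IDs cannot be reused on a connection; a new connection would be needed.
            raise RuntimeError("stream IDs exhausted")
        stream_id = self._next
        self._next += 2
        return stream_id


client = StreamIdAllocator(is_client=True)
server = StreamIdAllocator(is_client=False)
print([client.next_id() for _ in range(3)])  # [1, 3, 5]
print([server.next_id() for _ in range(2)])  # [2, 4]
```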

Binary framing moves some TCP-like functionality up into the application layer. The original "Header+Body" message is broken into small binary "frames": "HEADERS" frames carry header data and "DATA" frames carry the body. Once HTTP/2 data is framed, the "Header+Body" message structure disappears on the wire, and the protocol only sees "fragments".

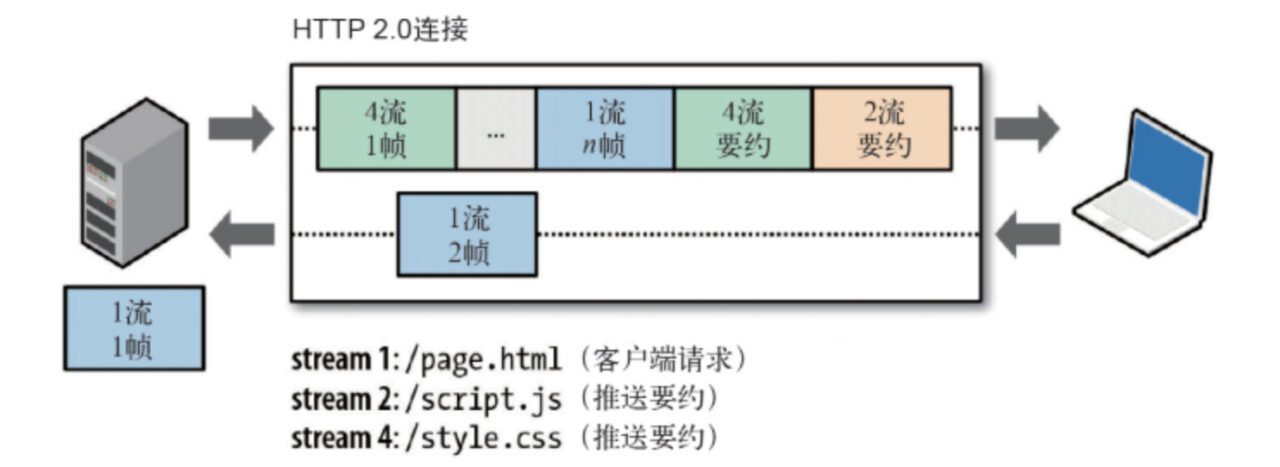

In HTTP/2, all communication with the same domain name takes place over a single connection, which can carry any number of bidirectional streams. Each stream carries messages, and each message consists of one or more frames. Frames from different streams can be interleaved on the wire. The receiver reassembles them using the stream identifier in each frame header.
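The interleave-and-reassemble idea can be sketched in Python. This is a simplified model: a "frame" here is just a `(stream_id, payload)` tuple with no binary header, and streams are interleaved round-robin. Real senders choose frame order by priority and flow control.

```python
from collections import defaultdict
from itertools import zip_longest

Frame = tuple[int, bytes]  # (stream_id, payload chunk): a simplified frame


def split_into_frames(stream_id: int, message: bytes, max_payload: int) -> list[Frame]:
    return [(stream_id, message[i:i + max_payload]) for i in range(0, len(message), max_payload)]


def interleave(*per_stream: list[Frame]) -> list[Frame]:
    # Take one frame from each stream in turn; order within each stream is kept.
    return [f for group in zip_longest(*per_stream) for f in group if f is not None]


def reassemble(frames: list[Frame]) -> dict[int, bytes]:
    # The receiver appends each payload to the buffer of the stream it belongs to.
    buffers: dict[int, bytearray] = defaultdict(bytearray)
    for stream_id, chunk in frames:
        buffers[stream_id] += chunk
    return {sid: bytes(buf) for sid, buf in buffers.items()}


html = split_into_frames(1, b"<html></html>", 4)
css = split_into_frames(3, b"body{}", 4)
wire = interleave(html, css)
print([sid for sid, _ in wire])  # [1, 3, 1, 3, 1, 1]
print(reassemble(wire))          # {1: b'<html></html>', 3: b'body{}'}
```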

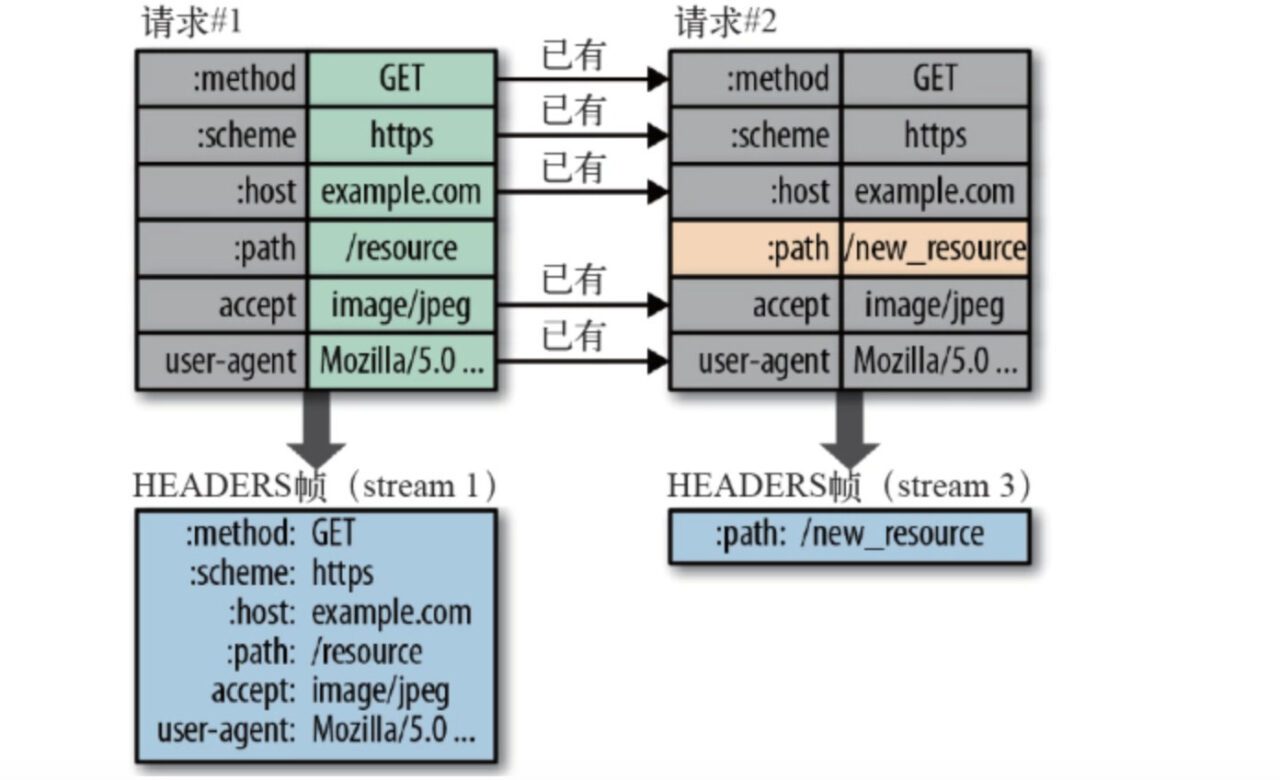

Header Compression

In HTTP/1, headers are sent as text. When they carry cookies and a user agent, hundreds to thousands of bytes may be resent with every request. Instead of a general-purpose compression algorithm, HTTP/2 uses a dedicated algorithm called "HPACK". The client and server each maintain a "dictionary", and repeated header fields are replaced by index numbers. Integers are written in a compact variable-length encoding, and string literals can be Huffman-coded. Compression rates of 50% to 90% are commonly cited.
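HPACK's integer encoding (RFC 7541, section 5.1) is one concrete piece of this scheme. Small values fit in the low bits of a single byte, and larger values spill into continuation bytes that carry 7 bits each. The Python sketch below leaves the upper flag bits of the first byte as zero. Real HPACK uses those bits to mark the representation type.

```python
def encode_int(value: int, prefix_bits: int) -> list[int]:
    """Encode an integer with an N-bit prefix; returns byte values."""
    max_prefix = (1 << prefix_bits) - 1
    if value < max_prefix:
        return [value]                    # fits in the prefix
    out = [max_prefix]                    # all prefix bits set: "more follows"
    value -= max_prefix
    while value >= 128:
        out.append((value % 128) + 128)   # low 7 bits, continuation bit set
        value //= 128
    out.append(value)                     # last byte, continuation bit clear
    return out


def decode_int(data: list[int], prefix_bits: int) -> int:
    max_prefix = (1 << prefix_bits) - 1
    value = data[0] & max_prefix
    if value < max_prefix:
        return value
    shift = 0
    for byte in data[1:]:
        value += (byte & 0x7F) << shift
        shift += 7
        if byte & 0x80 == 0:              # no continuation bit: done
            break
    return value


print(encode_int(10, 5))    # [10]
print(encode_int(1337, 5))  # [31, 154, 10]
```

The two printed cases match the worked examples in RFC 7541.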

The header table works as follows:

- HTTP/2 uses a "header table" on the client and server to track and store previously sent key-value pairs. The same data is no longer sent in each request and response.

- The header table always exists during the HTTP/2 connection and is progressively updated by the client and server;

- Each new header key-value pair is inserted into the table (HPACK adds it at the front, giving it the lowest index). When the table exceeds its size limit, the oldest entries are evicted.
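The header table idea can be sketched as a toy model in Python. It is not the HPACK wire format: the static table (61 predefined entries in RFC 7541) and Huffman coding are omitted, and tokens are plain tuples. The per-entry size (name + value + 32) and the 4096-byte default limit follow RFC 7541. The class and function names are hypothetical.

```python
from collections import deque


class HeaderTable:
    """Toy HPACK-style dynamic table: newest entry has index 1, oldest is evicted first."""

    def __init__(self, max_size: int = 4096) -> None:
        self.max_size = max_size
        self.entries = deque()  # (name, value) pairs, newest on the left

    @staticmethod
    def entry_size(name: str, value: str) -> int:
        # Name + value length plus 32 bytes of overhead (ASCII assumed).
        return len(name) + len(value) + 32

    def size(self) -> int:
        return sum(self.entry_size(n, v) for n, v in self.entries)

    def find(self, name: str, value: str):
        for index, entry in enumerate(self.entries, start=1):
            if entry == (name, value):
                return index
        return None

    def add(self, name: str, value: str) -> None:
        self.entries.appendleft((name, value))
        while self.size() > self.max_size:
            self.entries.pop()  # evict the oldest entry


def encode_headers(table: HeaderTable, headers):
    """Emit ('indexed', i) for known pairs, ('literal', name, value) otherwise."""
    tokens = []
    for name, value in headers:
        index = table.find(name, value)
        if index is not None:
            tokens.append(("indexed", index))
        else:
            tokens.append(("literal", name, value))
            table.add(name, value)  # the decoder makes the same insertion
    return tokens


def decode_headers(table: HeaderTable, tokens):
    headers = []
    for token in tokens:
        if token[0] == "indexed":
            headers.append(table.entries[token[1] - 1])
        else:
            _, name, value = token
            headers.append((name, value))
            table.add(name, value)
    return headers


encoder, decoder = HeaderTable(), HeaderTable()
request = [(":method", "GET"), ("user-agent", "demo/1.0"), ("cookie", "id=42")]
first = encode_headers(encoder, request)   # three literals
second = encode_headers(encoder, request)  # [('indexed', 3), ('indexed', 2), ('indexed', 1)]
print(decode_headers(decoder, first) == request, decode_headers(decoder, second) == request)
```

The second request shrinks to three small indexes. This is the "same data is no longer sent" effect described above.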

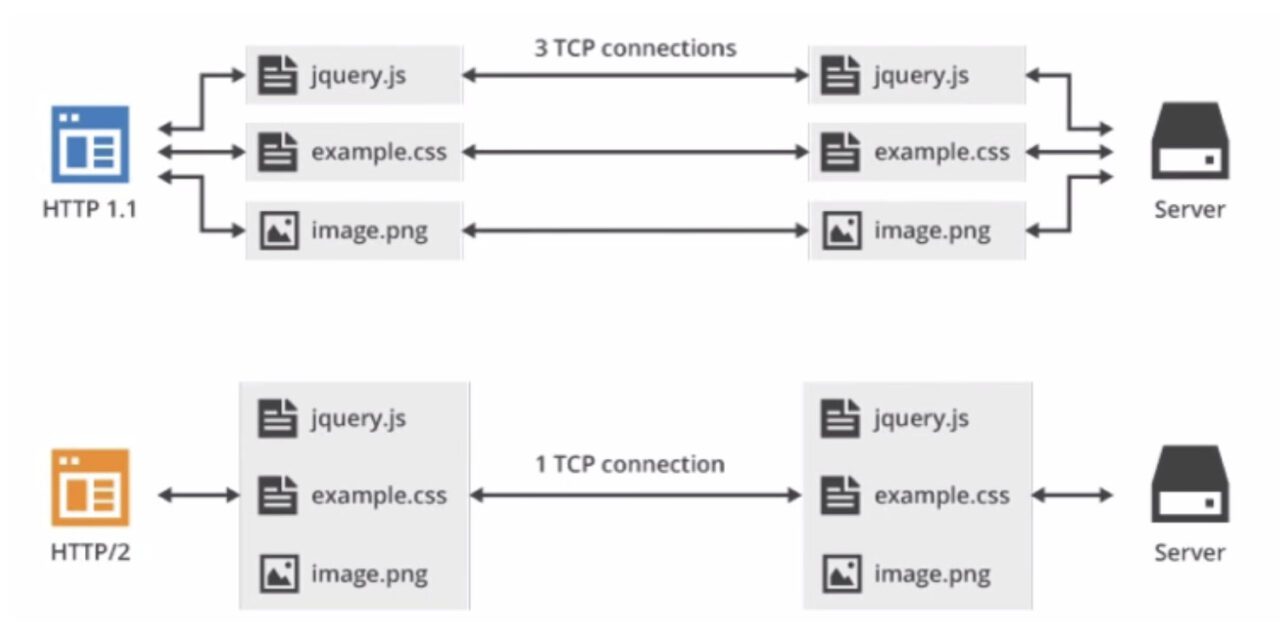

- Multiplexing (request and response multiplexing): All requests to the same domain need only one TCP connection. Frames are sent continuously over that connection, and each carries a stream identifier that says which stream it belongs to. The receiver joins all frames of each stream by stream identifier to rebuild complete messages.

The stream ID introduced above is what makes connection sharing possible. Each request corresponds to one stream with its own ID, so a connection can carry many streams, and frames of different streams can be interleaved freely. The receiver assigns frames to their requests by stream ID, so requests and responses do not interfere with each other.

Multiplexing can therefore be seen as an enhancement of persistent (keep-alive) connections. Requests can be interleaved arbitrarily, and the receiver matches each frame to the right request by its ID.

In multiplexing, stream priorities (Stream dependencies) are supported, allowing the client to tell the server which content is a higher priority resource and can be transmitted first.

- Server push: Another powerful new feature of HTTP/2 is that the server can send multiple responses to a single client request. Besides the response to the original request, the server can push additional resources to the client without the client explicitly requesting them.

The server pushes proactively, but the client can still choose whether to accept. If the browser already has a pushed resource in its cache, it can reject the push by sending a RST_STREAM frame.

Push follows the same-origin policy. The server cannot push arbitrary third-party resources to the client; both parties must agree to the push.

- Improved security: HTTP/2 keeps HTTP/1's support for plain text. It can still send data unencrypted and does not require encrypted communication. In that case the data is binary-framed but not encrypted, so it needs no decryption.

HTTPS is the general trend, and mainstream browsers such as Chrome and Firefox have announced that they only support HTTP/2 over encryption, so HTTP/2 is encrypted "in practice". The HTTP/2 commonly seen on the Internet uses the "https" scheme and runs over TLS. The HTTP/2 protocol defines two identifier strings: "h2" for HTTP/2 over TLS and "h2c" for cleartext HTTP/2.

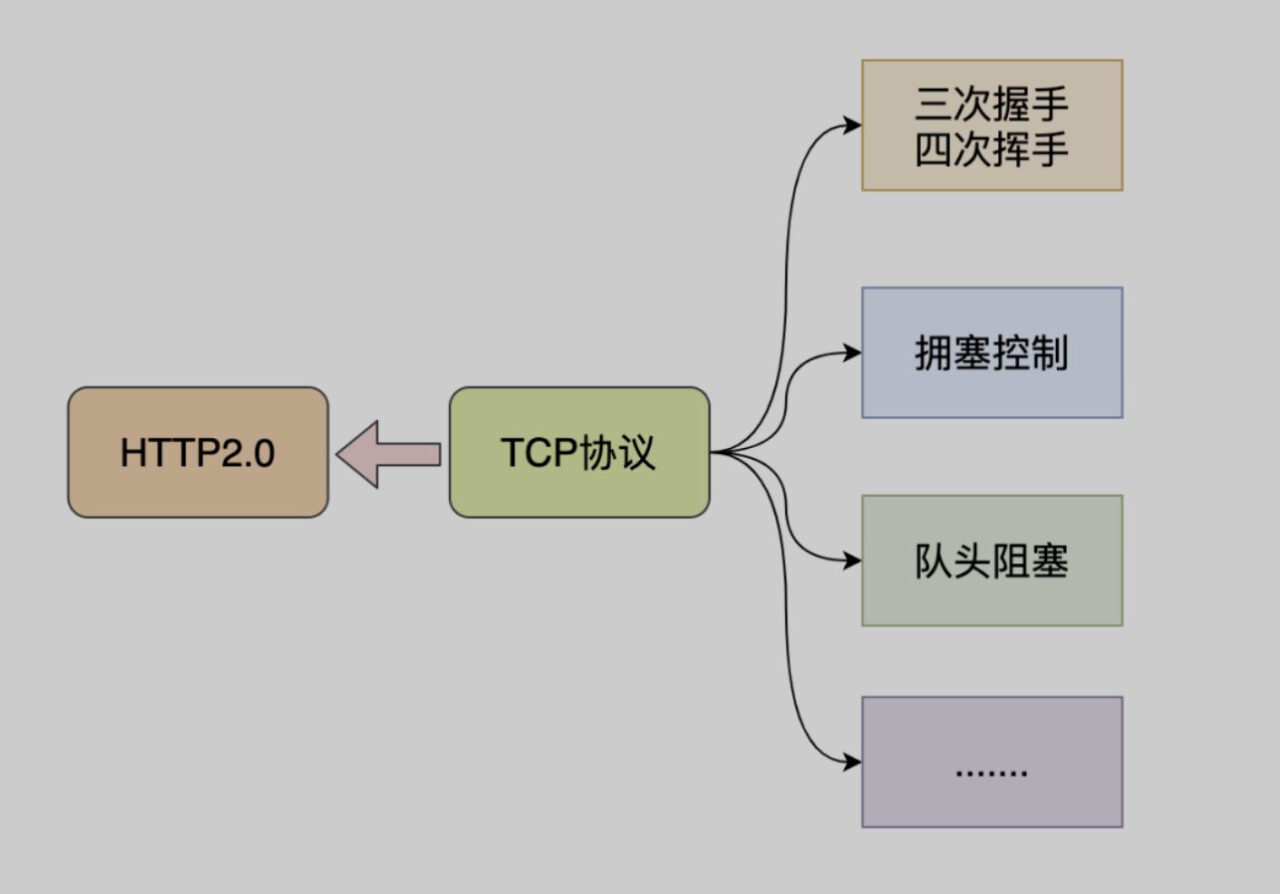

Problems with HTTP2

- Both HTTP/1.x and HTTP/2 run on TCP. TCP is a reliable transport protocol, but connection setup latency is still relatively high. This is mainly because of the round trips needed for the TCP handshake plus the TLS handshake.

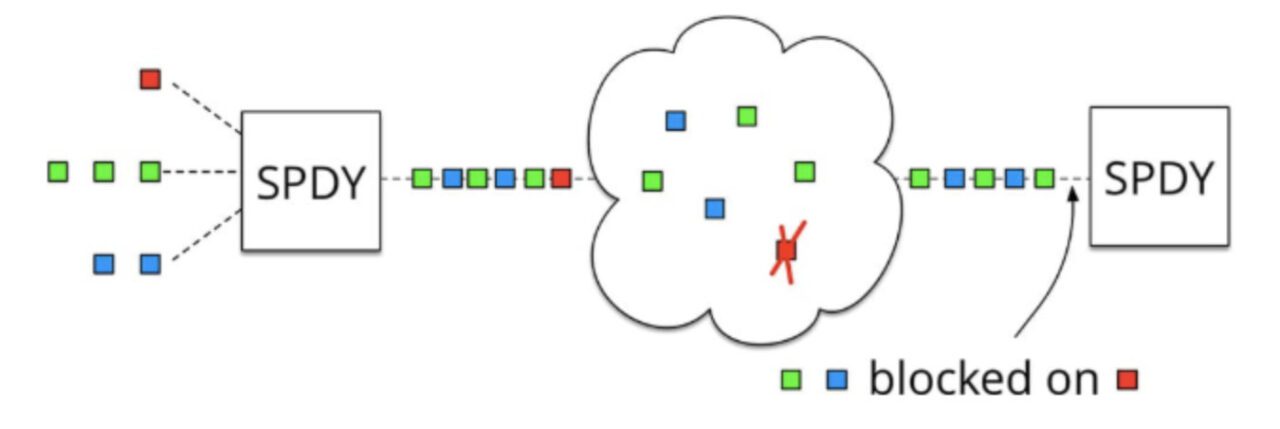

- TCP head-of-line blocking remains. HTTP/2 removes head-of-line blocking at the HTTP layer through multiplexing. However, TCP delivers data strictly in order, so a lost packet that must be retransmitted still blocks every stream on the connection.

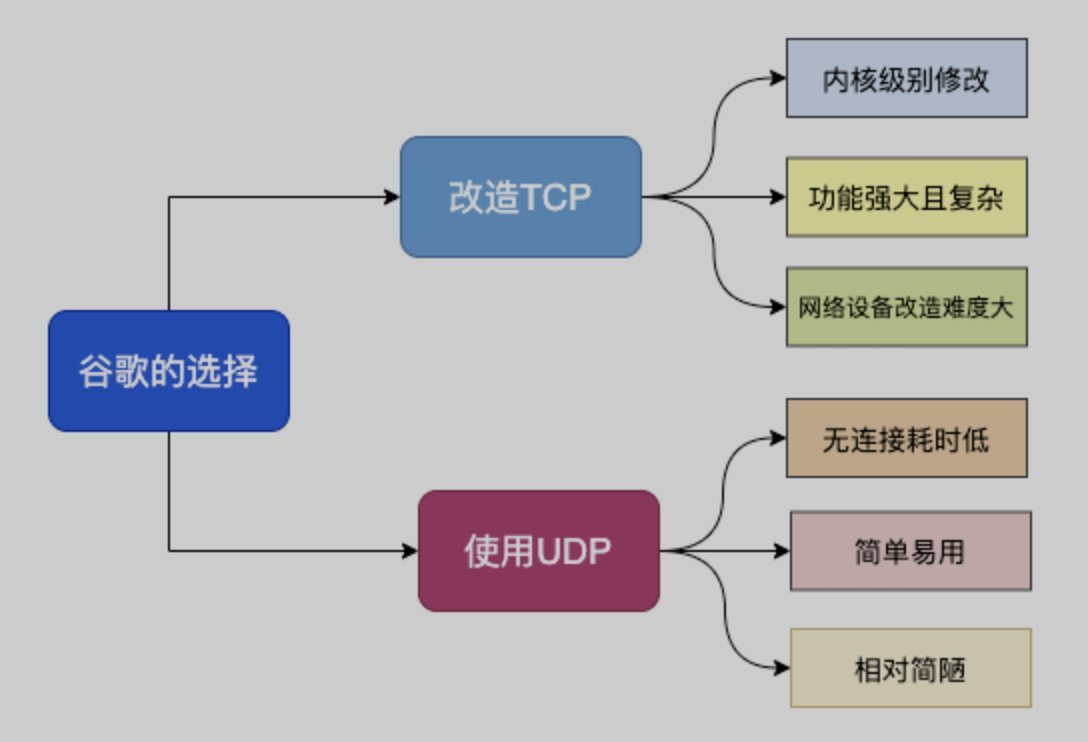

HTTP 3.0 Principles

TCP has existed for a long time and is deployed on a huge range of devices. It is also implemented in the operating system, so updating it is not practical.

For this reason, Google created QUIC, a protocol built on UDP, and HTTP/3 uses it. HTTP/3 was previously called HTTP-over-QUIC, which shows that its biggest change is the use of QUIC. QUIC (Quick UDP Internet Connections) is built on UDP to benefit from UDP's speed and efficiency. It also integrates and improves on the strengths of TCP, TLS, and HTTP/2.

Secondary goals of QUIC include reducing connection and transmission latency, performing bandwidth estimation in each direction to avoid congestion. It also moves the congestion control algorithm to user space instead of kernel space, and extends it with forward error correction (FEC) to further improve performance in the presence of errors.

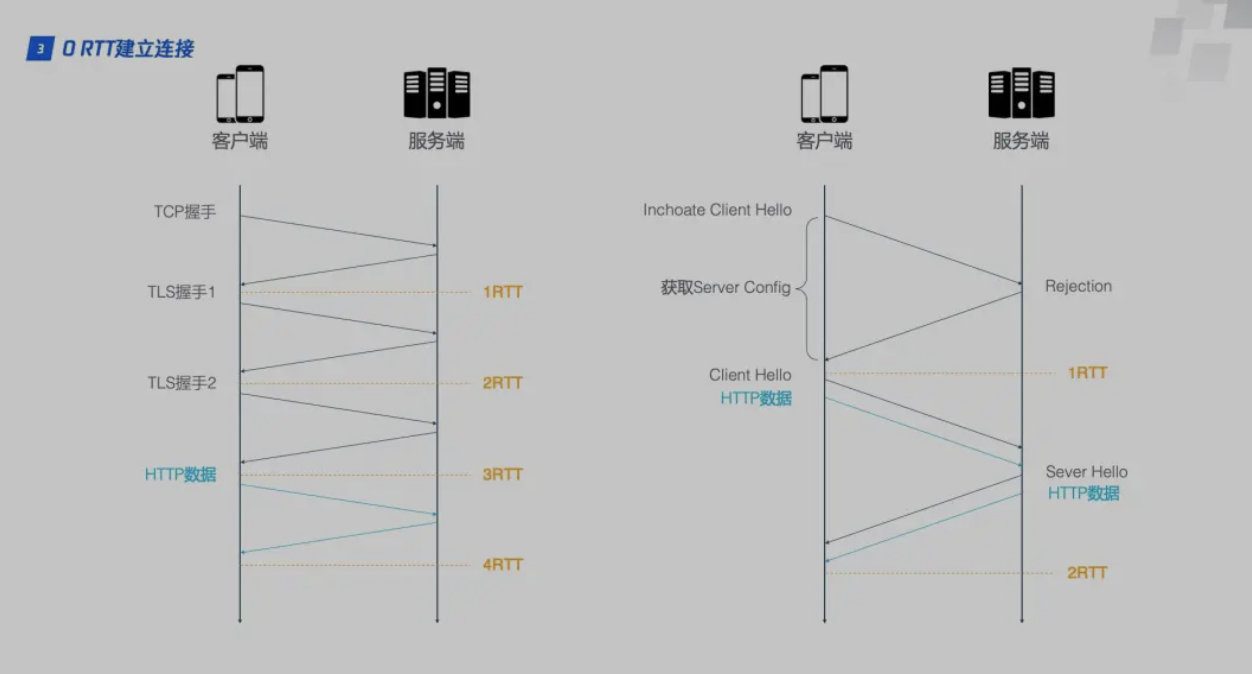

Features implemented by HTTP/3

- Fast handshake: An HTTP/2 connection (TCP + TLS 1.2) needs 3 RTTs before the first request can be sent. With session reuse, where the symmetric key computed in the first handshake is cached, it still needs 2 RTTs. With TLS 1.3, an HTTP/2 connection needs 2 RTTs, or 1 RTT with session reuse. HTTP/3, by contrast, needs only 1 RTT for the first connection and 0 RTT for subsequent connections.

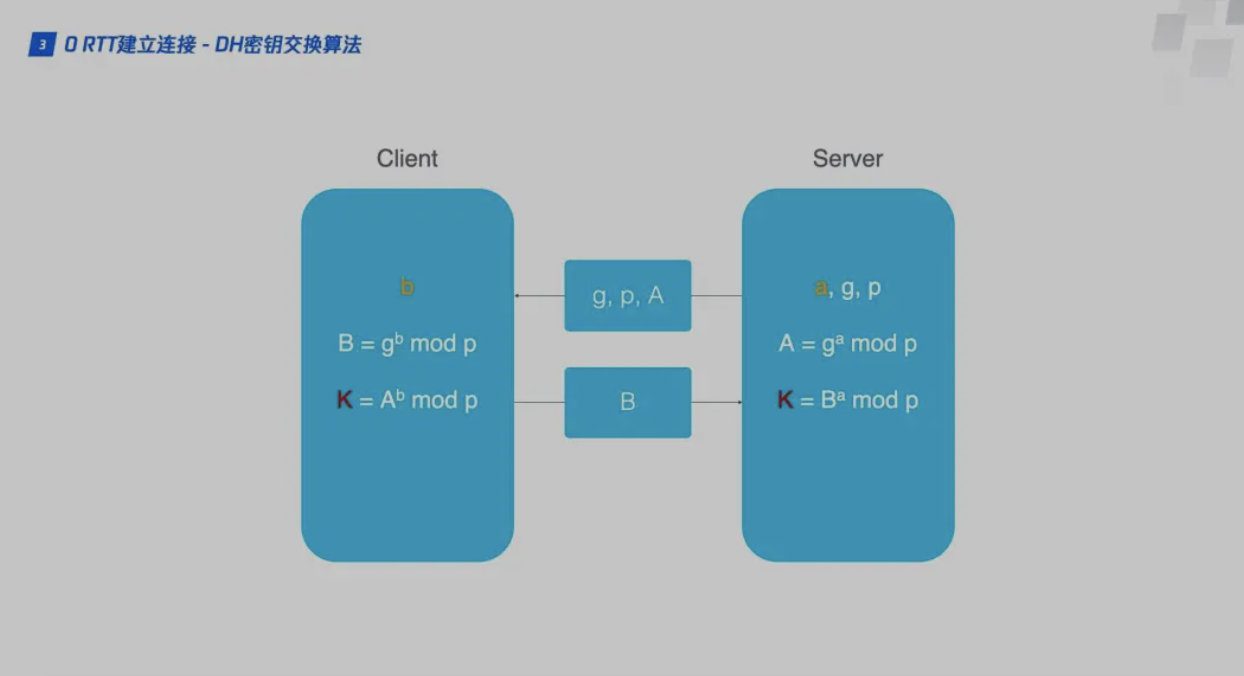
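The RTT figures above can be tallied as simple arithmetic. The sketch hard-codes the numbers from the text: 1 RTT for TCP, and 2 or 1 RTTs for TLS 1.2 (full or reused) and 1 or 0 RTTs for TLS 1.3. It does not model any real handshake.

```python
TCP_HANDSHAKE_RTTS = 1
# (TLS version, session reuse) -> RTTs added by the TLS handshake, per the text above
TLS_HANDSHAKE_RTTS = {
    ("1.2", False): 2,
    ("1.2", True): 1,
    ("1.3", False): 1,
    ("1.3", True): 0,
}


def http2_setup_rtts(tls_version: str, session_reuse: bool) -> int:
    # HTTP/2 over TCP: the TCP handshake must finish before TLS can start.
    return TCP_HANDSHAKE_RTTS + TLS_HANDSHAKE_RTTS[(tls_version, session_reuse)]


def http3_setup_rtts(session_reuse: bool) -> int:
    # QUIC combines the transport and crypto handshakes into one exchange.
    return 0 if session_reuse else 1


for version in ("1.2", "1.3"):
    for reuse in (False, True):
        print(f"HTTP/2, TLS {version}, reuse={reuse}: {http2_setup_rtts(version, reuse)} RTT")
print(f"HTTP/3 first: {http3_setup_rtts(False)} RTT, later: {http3_setup_rtts(True)} RTT")
```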

The client and server use a 1-RTT key exchange based on the Diffie-Hellman (DH) algorithm.

On the first connection, key negotiation and data transmission between client and server follow the basic process of the DH algorithm:

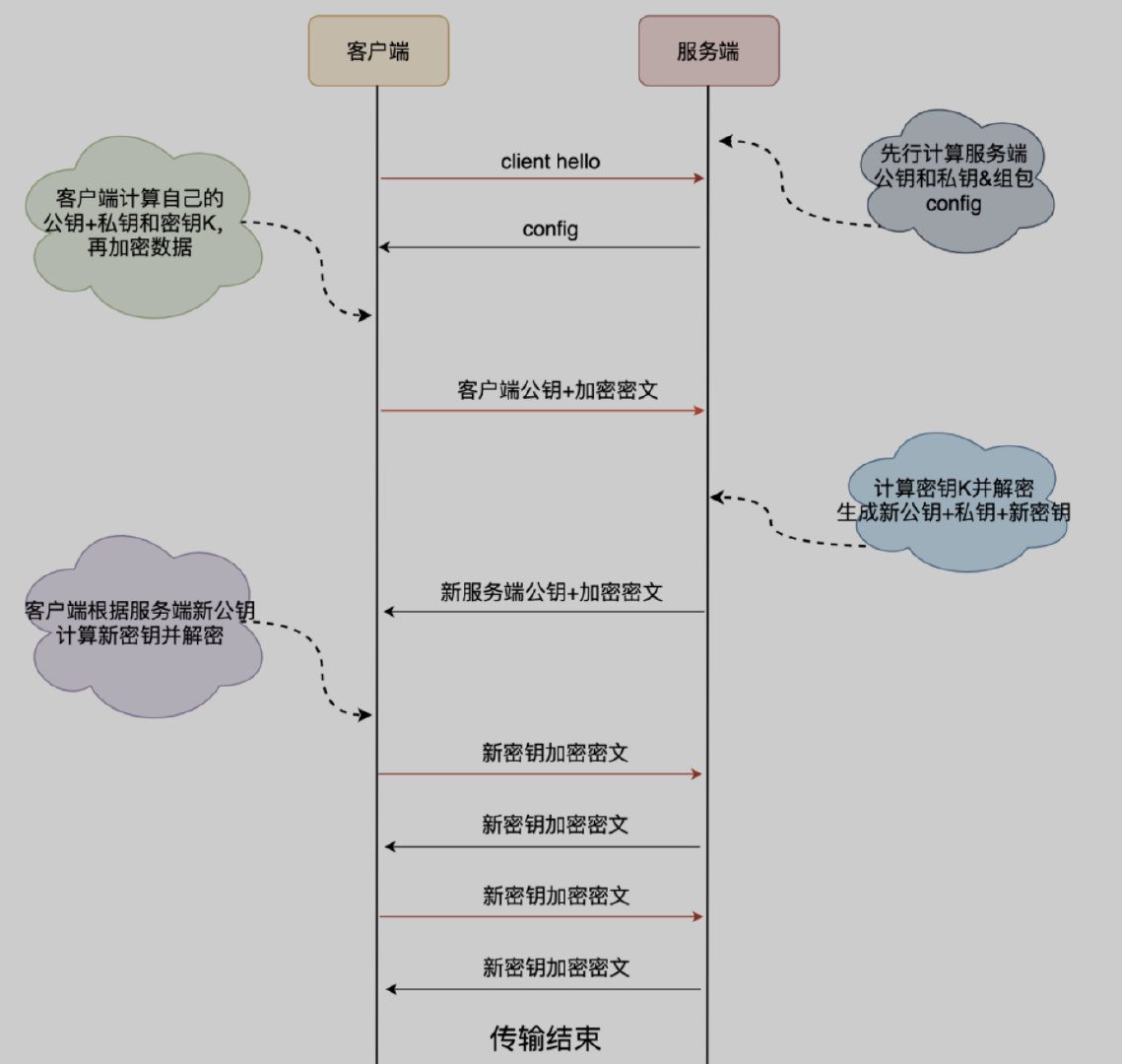

- The client first sends a client hello request to the server it connects to for the first time.

- The server generates a prime p and an integer g, plus a random number a as its private key. It then computes the public key $A = g^a \bmod p$. The server packages A, p, and g into a config and sends it to the client.

- The client randomly generates its own private key b, reads g and p from the config, and computes the client public key $B = g^b \bmod p$.

- Using its private key b and the server public key A from the config, the client computes the key $K = A^b \bmod p$ for encrypting subsequent data.

- The client encrypts the business data with K, appends its own public key B, and sends both to the server.

- Using its private key a and the client public key B, the server computes $K = B^a \bmod p$. This is the same key the client computed, because both equal $g^{ab} \bmod p$.

- For data security, the key K is used only once. The server then generates a new public/private key pair $(a', A')$ by the same rules and uses it to derive a new key $M = B^{a'} \bmod p$.

- The server sends its new public key $A'$, together with data encrypted with M, to the client. The client computes $M = A'^b \bmod p$ from the new server public key and its original private key, then decrypts the data.

- All subsequent data between the client and the server is protected with M; K is used only once.
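The DH steps above can be traced with Python's built-in three-argument `pow` (modular exponentiation). The parameters are toy values chosen for illustration: p is the Mersenne prime $2^{127}-1$ and g = 3. Real deployments use standardized groups or elliptic curves, and feed the shared value through a key derivation function rather than using it directly as a key.

```python
import secrets

# Toy parameters for illustration only; not secure choices.
P = 2**127 - 1
G = 3


def keypair() -> tuple[int, int]:
    private = secrets.randbelow(P - 3) + 2  # private key in [2, P-2]
    public = pow(G, private, P)             # public = g^private mod p
    return private, public


# First connection: the server publishes A (with p and g) in its config.
a, A = keypair()            # server
b, B = keypair()            # client
k_client = pow(A, b, P)     # K = A^b mod p
k_server = pow(B, a, P)     # K = B^a mod p
print(k_client == k_server)  # True

# The server then switches to a fresh key pair (a2, A2) and derives M.
a2, A2 = keypair()
m_server = pow(B, a2, P)    # M = B^a' mod p
m_client = pow(A2, b, P)    # M = A'^b mod p
print(m_client == m_server)  # True
```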

On the first connection, the server sends its config, which contains the server public key and the parameters p and g. The client stores the config and can reuse it when reconnecting. This skips the 1-RTT exchange, so business data is sent with 0 RTT.

The client can keep the config only for a limited time. Once it expires, the full first-connection key exchange is required again.
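The reconnect decision can be sketched as a client-side cache. `ServerConfig` and `ConfigCache` are hypothetical names, and the `expires_at` field is an assumed way of representing the config's validity period. The point is only the decision itself: a valid cached config allows 0-RTT, while a missing or expired one forces the 1-RTT key exchange.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class ServerConfig:
    public_key: int    # server public key A
    p: int
    g: int
    expires_at: float  # UNIX timestamp after which the config is no longer valid


class ConfigCache:
    """Client-side store of server configs, keyed by host name (hypothetical)."""

    def __init__(self) -> None:
        self._configs = {}

    def store(self, host: str, config: ServerConfig) -> None:
        self._configs[host] = config

    def handshake_rtts(self, host: str, now: Optional[float] = None) -> int:
        """0 if a valid cached config allows 0-RTT, otherwise 1 (full key exchange)."""
        now = time.time() if now is None else now
        config = self._configs.get(host)
        if config is not None and now < config.expires_at:
            return 0
        self._configs.pop(host, None)  # drop a missing or expired entry
        return 1


cache = ConfigCache()
print(cache.handshake_rtts("example.com"))  # 1: first connection
cache.store("example.com", ServerConfig(public_key=8, p=23, g=5, expires_at=time.time() + 3600))
print(cache.handshake_rtts("example.com"))  # 0: cached config still valid
```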

- Integrated TLS encryption

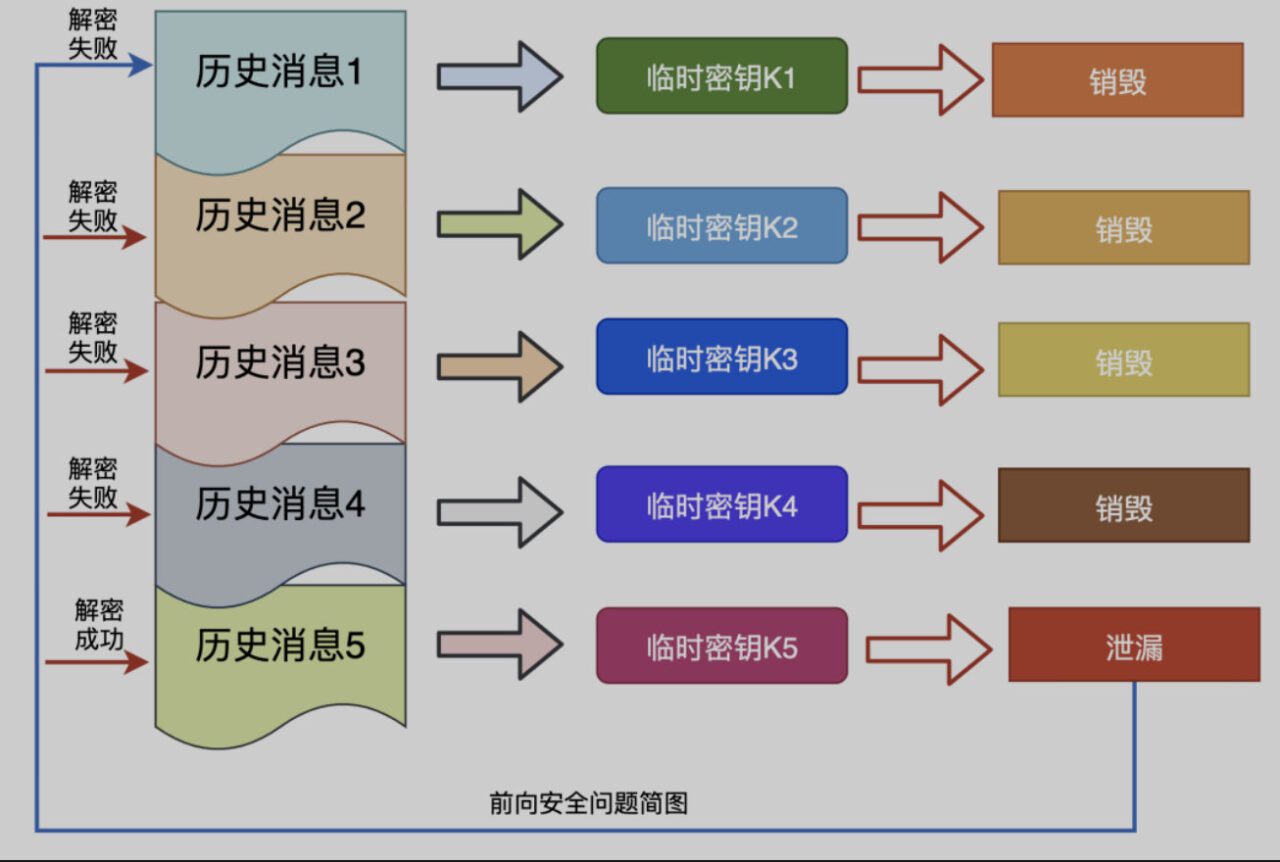

- Forward secrecy: Even if a key is leaked, previously encrypted data stays safe. A leak affects only the current data, not earlier data.

QUIC relies on the config's limited validity period and then generates a new key. That key is used for encryption and decryption and destroyed when the exchange is complete, which provides forward secrecy.

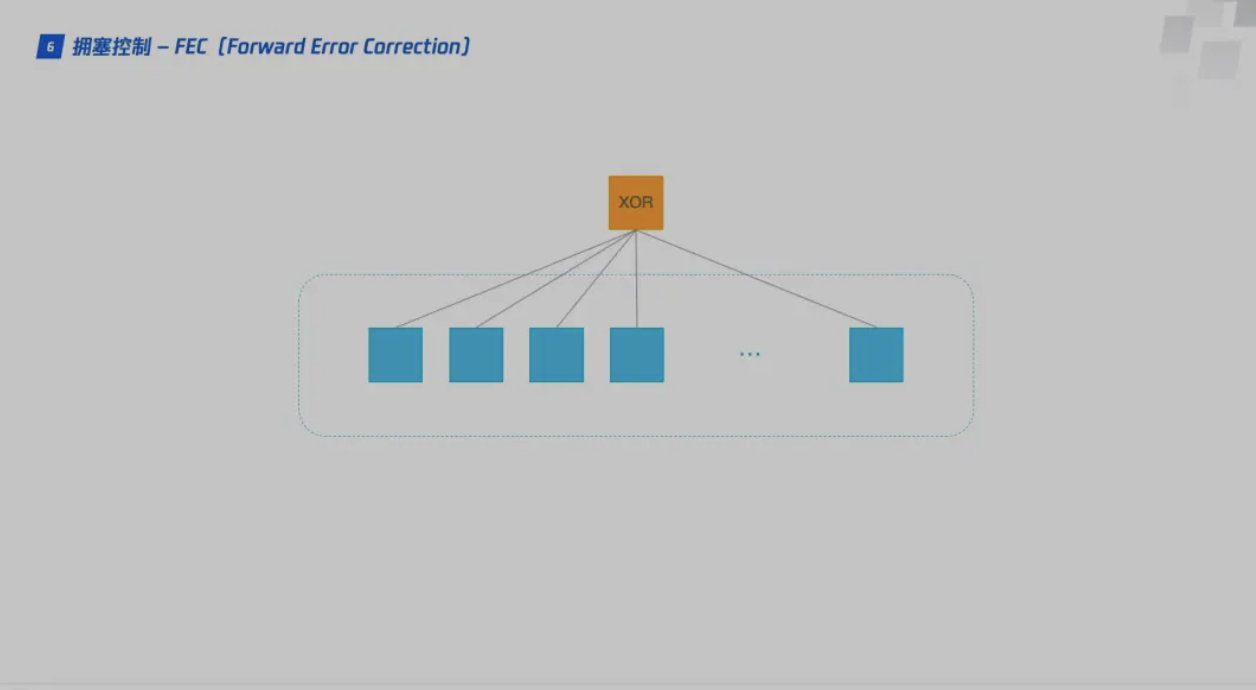

- Forward error correction: Forward Error Correction (FEC), also called forward error-correcting code, is a method for making data communication more reliable. On a one-way channel, a receiver that detects an error has no way to request retransmission.

FEC is a method of transmitting redundant information with data, allowing the receiver to reconstruct the data when errors occur during transmission.

Each time QUIC sends a group of packets, it XORs them together and sends the result as an FEC packet. The receiver can then check and correct the group using the data packets plus the FEC packet. For example, a piece of data might be split into 10 packets; the 10 packets are XORed in turn, and the result is sent with them as the FEC packet. If one data packet is lost in transit, its contents can be reconstructed from the remaining 9 packets and the FEC packet. A single XOR packet can recover only one lost packet per group, but this still greatly improves the protocol's fault tolerance.
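The XOR scheme above can be sketched in a few lines of Python. It assumes all packets in a group have the same length (a real scheme would pad shorter packets) and marks a lost packet as `None`.

```python
from functools import reduce
from typing import Optional


def xor_bytes(x: bytes, y: bytes) -> bytes:
    # Assumes equal-length packets.
    return bytes(a ^ b for a, b in zip(x, y))


def make_fec_packet(packets: list[bytes]) -> bytes:
    """XOR all packets of a group together to form the FEC packet."""
    return reduce(xor_bytes, packets)


def recover(received: list[Optional[bytes]], fec: bytes) -> list[Optional[bytes]]:
    """Rebuild at most one missing packet (marked None) from the others plus FEC."""
    missing = [i for i, pkt in enumerate(received) if pkt is None]
    if len(missing) > 1:
        raise ValueError("one XOR parity packet can only rebuild one lost packet")
    restored = list(received)
    if missing:
        # fec ^ (all surviving packets) leaves exactly the lost packet.
        survivors = [pkt for pkt in received if pkt is not None]
        restored[missing[0]] = reduce(xor_bytes, survivors, fec)
    return restored


packets = [bytes([i] * 4) for i in range(10)]  # 10 packets of 4 bytes each
fec = make_fec_packet(packets)
damaged = packets[:3] + [None] + packets[4:]    # packet 3 is lost
print(recover(damaged, fec) == packets)         # True
```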

- Multiplexing without TCP head-of-line blocking: The multiplexing mechanism of HTTP/2.0 solves head-of-line blocking at the HTTP layer, but head-of-line blocking still exists at the TCP layer.

TCP packets may arrive out of order, but TCP must collect, reorder, and assemble all the data before handing it to the upper layer. If one packet is lost, TCP must wait for its retransmission, so a single lost packet blocks the data of the entire connection.

QUIC is built on UDP and supports multiple streams on one connection that do not affect one another. When one stream loses a packet, only that stream waits. The impact is small, which solves the head-of-line blocking problem.
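The difference can be illustrated with a simplified delivery model in Python. It assumes each packet carries data for exactly one stream and that sequence numbers are global to the connection. Stream labels like "A" are placeholders. The question asked is which packets the application can use before the lost one is retransmitted.

```python
def usable_before_retransmit(packets, lost_seq, per_stream_order):
    """Packets the application can consume before the lost packet is resent.

    packets: (sequence number, stream label) pairs in sending order.
    per_stream_order=False models TCP: one ordered byte stream for the whole
    connection. per_stream_order=True models QUIC: order is enforced per stream.
    """
    usable = []
    blocked_streams = set()
    connection_blocked = False
    for seq, stream in packets:
        if seq == lost_seq:
            if per_stream_order:
                blocked_streams.add(stream)  # only this stream must wait
            else:
                connection_blocked = True    # everything after the gap must wait
            continue
        if connection_blocked or stream in blocked_streams:
            continue
        usable.append((seq, stream))
    return usable


packets = [(1, "A"), (2, "B"), (3, "A"), (4, "B"), (5, "C")]
print(usable_before_retransmit(packets, lost_seq=2, per_stream_order=False))  # [(1, 'A')]
print(usable_before_retransmit(packets, lost_seq=2, per_stream_order=True))   # [(1, 'A'), (3, 'A'), (5, 'C')]
```

Losing packet 2 (stream B) stalls everything under the TCP model. Under the per-stream model, streams A and C keep flowing.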

- Implement TCP-like flow control (… this part is long, see reference)