
What is the robots meta tag?

Making good use of the robots meta tag makes your SEO more effective with less effort, and it is essential for website optimization and Google indexing. The robots meta tag is a page-level setting that controls whether an individual page is indexed and shown in search results. It tells search engines how to treat that page, for example whether to index it or follow its links.

Where do I put the robots meta tag?

The robots meta tag is placed in the head section of the web page!

How to write the robots meta tag

The most common form is `<meta name="robots" content="noindex">`. This tag tells all search engines not to index the page, which keeps it out of search results entirely.
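To see how a crawler might read this tag, here is a minimal Python sketch built on the standard library's `html.parser`. The class and function names are hypothetical, and for simplicity it only reads `<meta>` elements inside an explicit `<head>` element; real crawlers parse HTML far more leniently.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect (name, directives) pairs from <meta> tags inside <head>."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.tags: list[tuple[str, list[str]]] = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "meta" and self.in_head:
            # html.parser already lowercases tag and attribute names.
            attr = {key: value or "" for key, value in attrs}
            name = attr.get("name", "").strip().lower()
            content = attr.get("content", "")
            if name and content:
                # Directives are case-insensitive, so normalize them to lowercase.
                directives = [d.strip().lower() for d in content.split(",") if d.strip()]
                self.tags.append((name, directives))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def robots_meta_tags(html: str) -> list[tuple[str, list[str]]]:
    """Return every named <meta> tag in <head>; callers pick "robots" or a crawler name."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.tags


page = '<html><head><meta name="robots" content="noindex"></head><body>Hi</body></html>'
print(robots_meta_tags(page))  # [('robots', ['noindex'])]
```

The parser keeps every named `<meta>` tag rather than only `robots`, because crawler-specific tags such as `googlebot` use the same structure.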

Specifications for the robots meta tag, data-nosnippet, and X-Robots-Tag

This section explains how to use page-level and text-level settings to adjust how Google presents your content in search results. You can specify page-level settings by adding a meta tag to your HTML pages or an HTTP header to your responses. You can specify text-level settings with the data-nosnippet attribute.

Note that these settings are read and honored only if Google's crawler can access the page that contains them.

These tags and directives apply to search engine crawlers. To block non-search crawlers such as AdsBot-Google, you may need to add directives aimed at that specific crawler, for example `<meta name="AdsBot-Google" content="noindex">`.

Using the robots meta tag

The robots meta tag allows you to control how individual pages are indexed and displayed to users in Google Search results using granular, page-level settings. Place the robots meta tag in the head section of a given page.

Take the tag `<meta name="robots" content="noindex">`: it instructs search engines not to show the page in search results. The value of the name attribute (robots) specifies that the directive applies to all crawlers. To target a specific crawler, replace robots in the name attribute with the name of that crawler. A specific crawler is also called a user-agent (a crawler identifies itself by its user-agent when it requests a page). The user-agent name for Google's standard web crawler is Googlebot. If you only want to prevent Googlebot from indexing your page, change the tag to `<meta name="googlebot" content="noindex">`.

This tag will now explicitly instruct Google not to show this page in Google search results. Both the name and content attributes are case insensitive.

Search engines may use different crawlers for different purposes; for more information, see Complete list of Google crawlers. For example, if you want a page to appear in Google web search results but not in Google News, you could use `<meta name="googlebot-news" content="noindex">`.

To address multiple crawlers individually, use multiple robots meta tags, one per crawler, each with its own name attribute.
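If your pages are generated from code, one way to emit a separate tag per crawler is a small helper like the hypothetical `render_robots_meta` below (Python, standard library only). The crawler names and directives in the usage line are only examples.

```python
from html import escape


def render_robots_meta(rules: dict[str, list[str]]) -> str:
    """Render one <meta> tag per crawler; each key becomes the name attribute."""
    tags = []
    for name, directives in rules.items():  # dicts preserve insertion order
        content = ", ".join(directives)
        # escape() keeps quotes or angle brackets from breaking the attribute.
        tags.append(f'<meta name="{escape(name)}" content="{escape(content)}">')
    return "\n".join(tags)


print(render_robots_meta({"googlebot": ["noindex"], "googlebot-news": ["nosnippet"]}))
# <meta name="googlebot" content="noindex">
# <meta name="googlebot-news" content="nosnippet">
```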

To prevent non-HTML resources (such as PDF files, video files, or image files) from being indexed, use the X-Robots-Tag HTTP response header instead.

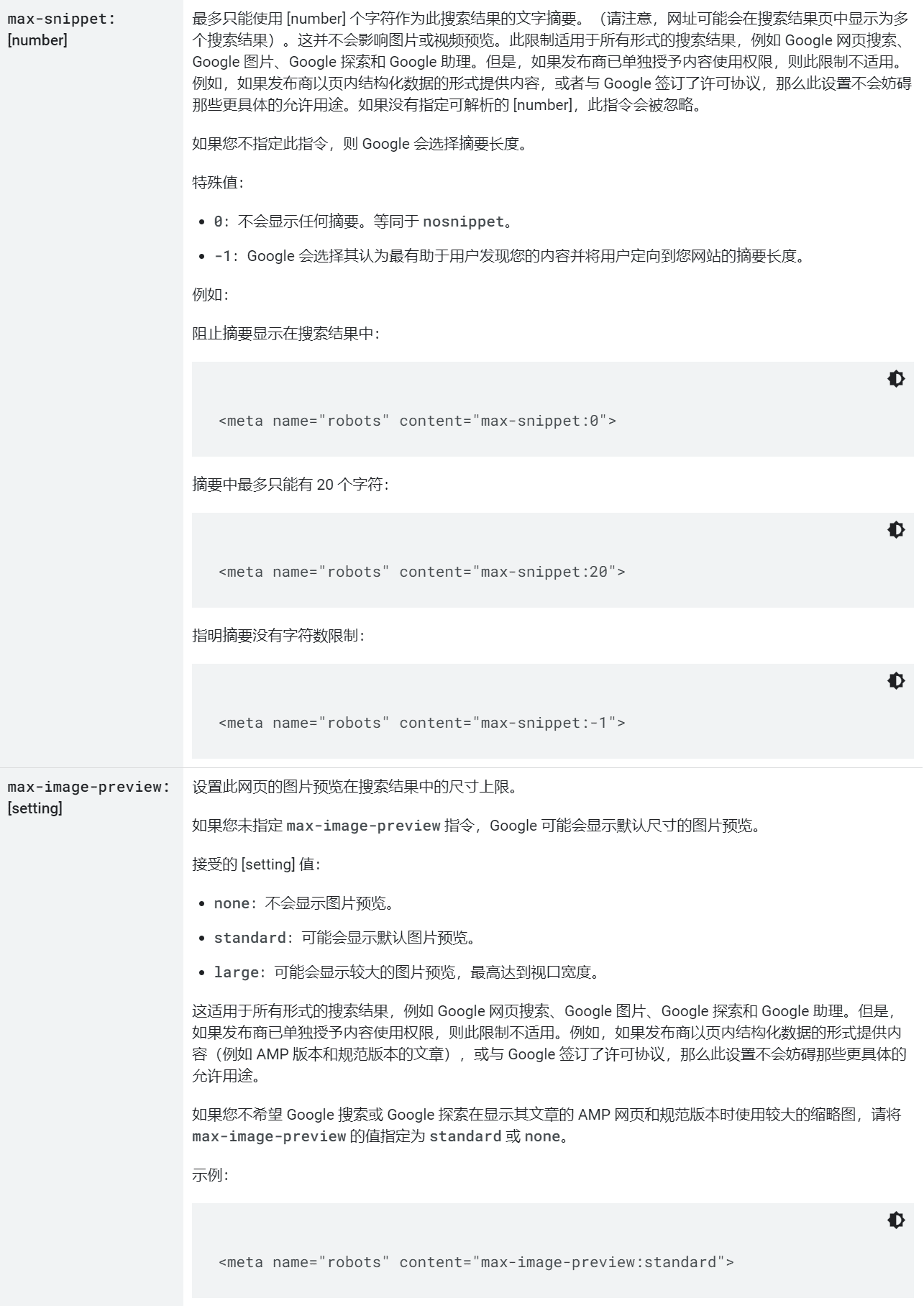

Using the X-Robots-Tag HTTP header

The X-Robots-Tag can be sent as a header in the HTTP response for a given URL. Any directive that can be used in a robots meta tag can also be specified as an X-Robots-Tag. Here is an example of an HTTP response with an X-Robots-Tag that instructs crawlers not to index a page:

```http
HTTP/1.1 200 OK
Date: Tue, 25 May 2010 21:42:43 GMT
(…)
X-Robots-Tag: noindex
(…)
```

You can combine multiple X-Robots-Tag headers in an HTTP response, or specify a comma-separated list of directives. The following example HTTP header response combines the noarchive X-Robots-Tag with the unavailable_after X-Robots-Tag.

```http
HTTP/1.1 200 OK
Date: Tue, 25 May 2010 21:42:43 GMT
(…)
X-Robots-Tag: noarchive
X-Robots-Tag: unavailable_after: 25 Jun 2010 15:00:00 PST
(…)
```

X-Robots-Tag can also specify a user-agent in front of the directive. For example, the following set of X-Robots-Tag HTTP headers can be used to conditionally allow a page to appear in search results of different search engines:

```http
HTTP/1.1 200 OK
Date: Tue, 25 May 2010 21:42:43 GMT
(…)
X-Robots-Tag: googlebot: nofollow
X-Robots-Tag: otherbot: noindex, nofollow
(…)
```

If no user-agent is specified in the directive, it applies to all crawlers. The HTTP header, user-agent name, and specified value are not case-sensitive.
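To make the user-agent prefix concrete, here is a minimal Python sketch that groups X-Robots-Tag header values by crawler. The function name and the `VALUE_DIRECTIVES` set are assumptions for illustration: the set exists because directives such as unavailable_after contain their own colon and must not be mistaken for a user-agent name. The sketch also assumes no directive value contains a comma.

```python
# Directives whose own syntax contains a colon, so their name must not be
# mistaken for a user-agent prefix. Assumed list for this sketch only.
VALUE_DIRECTIVES = {"max-snippet", "max-image-preview", "max-video-preview", "unavailable_after"}


def parse_x_robots_tag(header_values: list[str]) -> dict[str, list[str]]:
    """Group X-Robots-Tag values by user-agent; "*" means all crawlers."""
    by_agent: dict[str, list[str]] = {}
    for raw in header_values:
        agent, body = "*", raw
        head, sep, rest = raw.partition(":")
        # "googlebot: nofollow" has a user-agent prefix, while
        # "noindex, max-snippet:20" and "unavailable_after: ..." do not.
        if sep and "," not in head and head.strip().lower() not in VALUE_DIRECTIVES:
            agent, body = head.strip().lower(), rest  # user-agent names are case-insensitive
        directives = [d.strip() for d in body.split(",") if d.strip()]
        by_agent.setdefault(agent, []).extend(directives)
    return by_agent


print(parse_x_robots_tag(["googlebot: nofollow", "otherbot: noindex, nofollow"]))
# {'googlebot': ['nofollow'], 'otherbot': ['noindex', 'nofollow']}
```

Values grouped under `"*"` apply to every crawler, matching the rule that a directive without a user-agent applies to all crawlers.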

Conflicting robots directives: If there are conflicting robots directives, the more restrictive directive will be honored. For example, if a page contains both max-snippet:50 and a nosnippet directive, the nosnippet directive will be honored.
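The rule that the more restrictive directive wins can be modeled for a few directive pairs. This Python sketch is an approximation under stated assumptions: it only handles index/noindex, follow/nofollow and nosnippet/max-snippet, and it assumes the smallest non-negative max-snippet value is the most restrictive, with -1 meaning no limit. The function name is hypothetical.

```python
def resolve_conflicts(directives: list[str]) -> set[str]:
    """Keep the more restrictive directive whenever two of them conflict."""
    names = {d.strip().lower() for d in directives}
    resolved: set[str] = set()

    # Boolean pairs: the negative (more restrictive) form wins.
    for positive, negative in (("index", "noindex"), ("follow", "nofollow")):
        if negative in names:
            resolved.add(negative)
        elif positive in names:
            resolved.add(positive)

    # Snippet length: nosnippet beats any max-snippet value. Otherwise the
    # smallest non-negative limit wins; -1 (no limit) applies only on its own.
    limits = [int(n.split(":", 1)[1]) for n in names if n.startswith("max-snippet:")]
    if "nosnippet" in names:
        resolved.add("nosnippet")
    elif limits:
        bounded = [n for n in limits if n >= 0]
        resolved.add(f"max-snippet:{min(bounded) if bounded else -1}")

    # Every other directive passes through unchanged.
    handled = {"index", "noindex", "follow", "nofollow", "nosnippet"}
    resolved |= {n for n in names if n not in handled and not n.startswith("max-snippet:")}
    return resolved


print(resolve_conflicts(["max-snippet:50", "nosnippet"]))  # {'nosnippet'}
```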

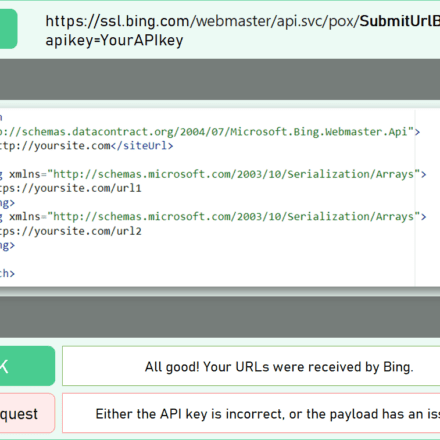

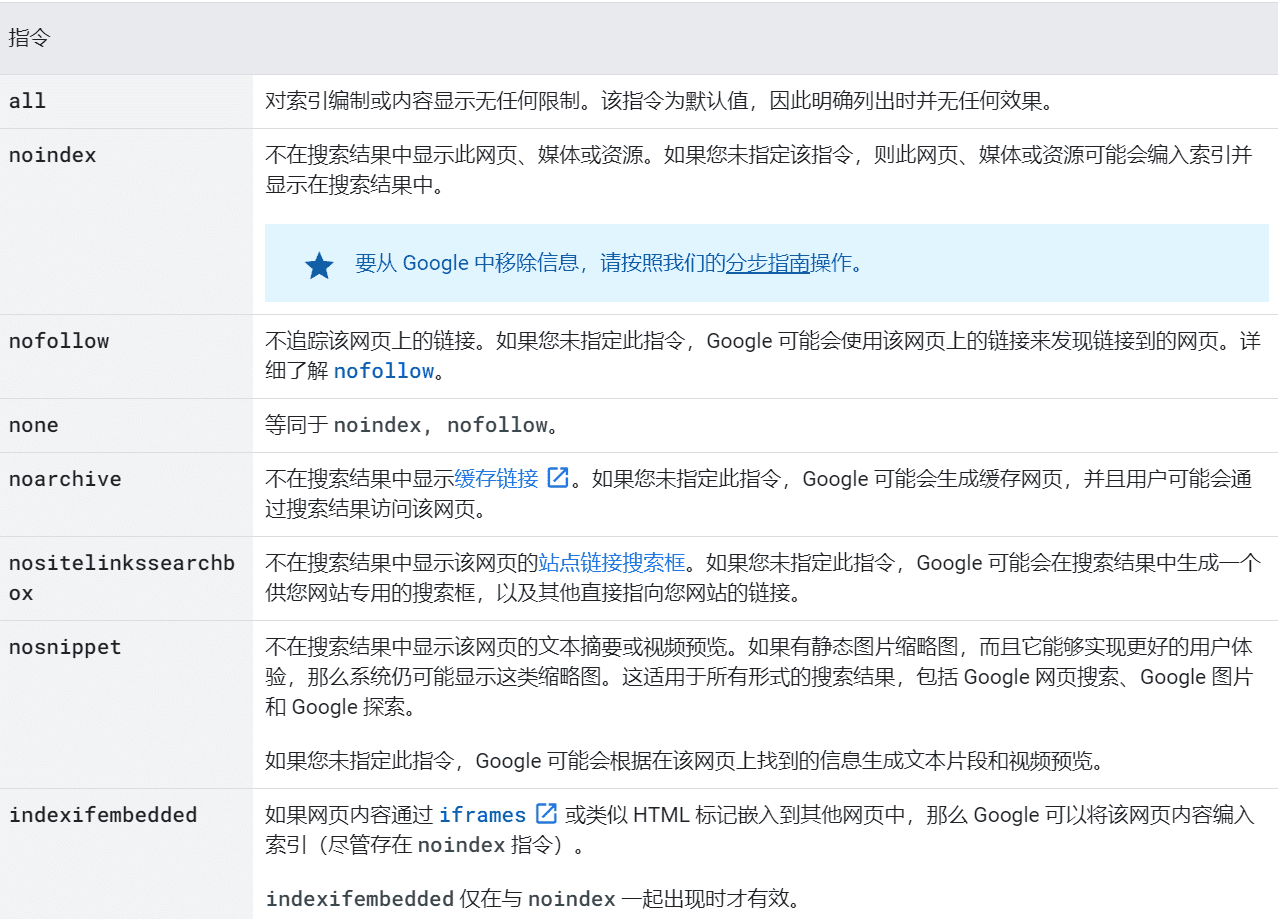

Valid indexing and content serving instructions

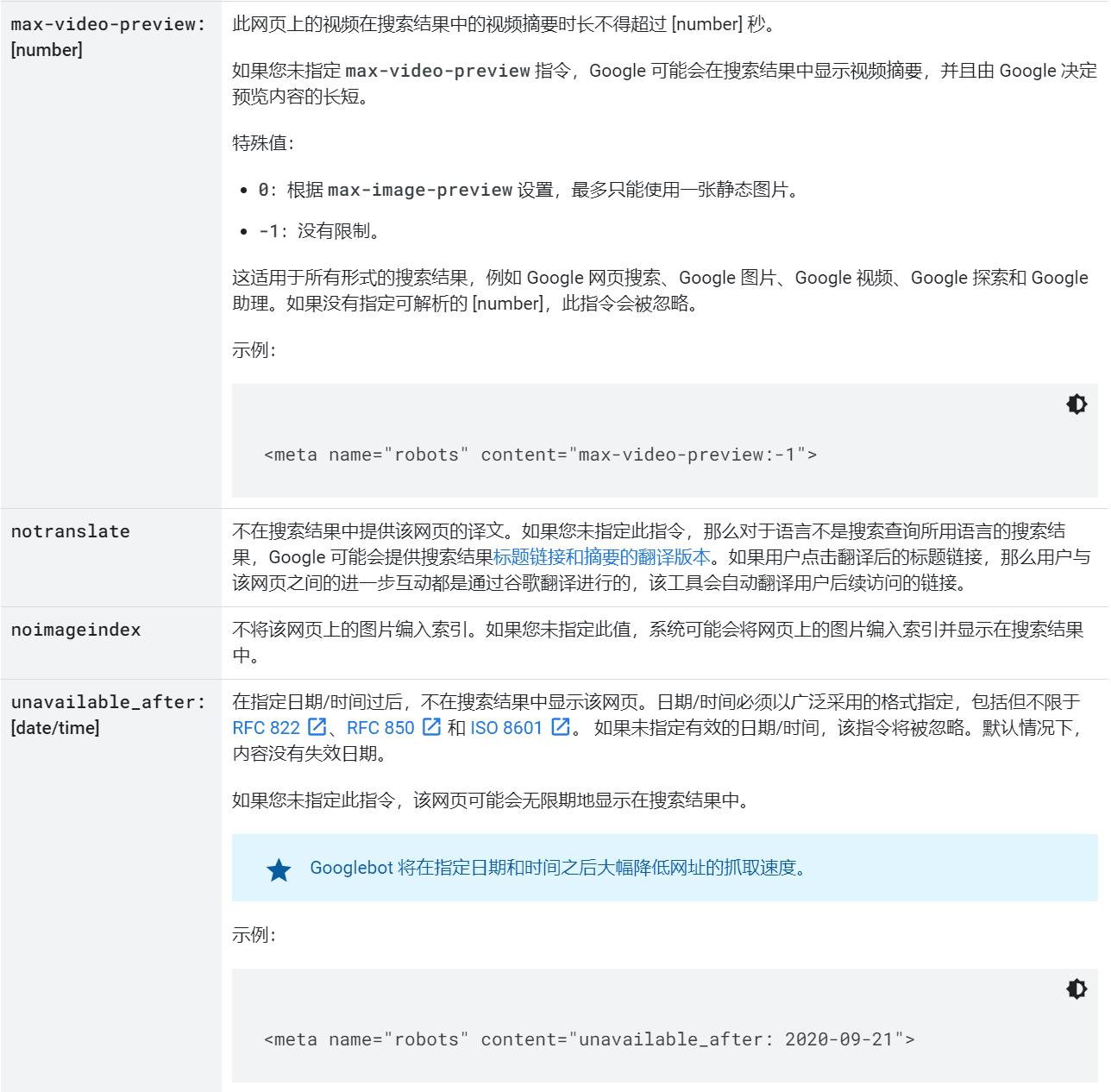

You can use the following directives with the robots meta tag and the X-Robots-Tag to control indexing and snippet display. In search results, a snippet is a short text excerpt that shows the user how the document relates to their query. The following table lists all the directives that Google supports and their meanings. Each value represents a specific directive. You can combine multiple directives into a comma-separated list or use multiple meta tags. These directives are case-insensitive.

How to handle combined indexing and serving instructions

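You can combine several robots meta tag directives in one tag by separating them with commas, or place them in multiple meta tags; either way they form a single set of instructions. For example, both of the following tell crawlers not to index the page and not to follow any links on it:

Comma-separated list: `<meta name="robots" content="noindex, nofollow">`

Multiple meta tags: `<meta name="robots" content="noindex">` and `<meta name="robots" content="nofollow">`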

The following example limits the text snippet to at most 20 characters and allows large image previews: `<meta name="robots" content="max-snippet:20, max-image-preview:large">`
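Whether directives arrive as one comma-separated content value or spread over several tags, a parser can flatten them into the same result. The hypothetical `parse_directives` below is a Python sketch of that; it splits each entry on its first colon so value-carrying directives such as max-snippet:20 keep their value.

```python
from typing import Optional


def parse_directives(contents: list[str]) -> dict[str, Optional[str]]:
    """Flatten one or more content="..." values into {directive: value or None}."""
    parsed: dict[str, Optional[str]] = {}
    for content in contents:
        for item in content.split(","):
            # Split on the first colon only, so a value such as
            # "unavailable_after: 25 Jun 2010 15:00:00 PST" keeps its time intact.
            name, _, value = item.strip().partition(":")
            if name.strip():
                # A repeated directive overwrites the earlier one here;
                # this sketch does not resolve conflicts.
                parsed[name.strip().lower()] = value.strip() or None
    return parsed


# One comma-separated tag and two separate tags yield the same directives.
assert parse_directives(["noindex, nofollow"]) == parse_directives(["noindex", "nofollow"])
print(parse_directives(["max-snippet:20, max-image-preview:large"]))
# {'max-snippet': '20', 'max-image-preview': 'large'}
```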

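If you address several crawlers and give each one different directives, the search engine combines all the negative directives that apply to a given crawler. For example, suppose a page contains `<meta name="robots" content="nofollow">` and `<meta name="googlebot" content="noindex">`.

When Googlebot crawls a page containing these meta tags, it treats the page as having both the noindex and nofollow directives.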
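The combination rule can be sketched as a set union over every tag that applies to the crawler in question. This hypothetical Python helper takes (name, directives) pairs and, following the note about non-search crawlers earlier in this article, treats AdsBot-Google as not covered by the generic robots name; that exception set is an assumption and not exhaustive.

```python
# Crawlers assumed (for this sketch) to ignore the generic "robots" name,
# so they must be addressed explicitly. Not a complete list.
IGNORES_GENERIC_ROBOTS = {"adsbot-google"}


def effective_directives(tags: list[tuple[str, list[str]]], crawler: str) -> set[str]:
    """Union of all directives that apply to one crawler."""
    crawler = crawler.lower()
    names = {crawler} if crawler in IGNORES_GENERIC_ROBOTS else {"robots", crawler}
    applicable: set[str] = set()
    for name, directives in tags:
        if name.lower() in names:  # the name attribute is case-insensitive
            applicable.update(d.strip().lower() for d in directives)
    return applicable


tags = [("robots", ["nofollow"]), ("googlebot", ["noindex"])]
print(sorted(effective_directives(tags, "Googlebot")))  # ['nofollow', 'noindex']
```

A crawler that is not named anywhere, say a hypothetical otherbot, would only pick up the nofollow from the generic robots tag.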

Using the data-nosnippet HTML attribute

You can specify which textual parts of an HTML page should not be used to generate a snippet. You do this at the HTML element level with the data-nosnippet attribute on span, div, and section elements. data-nosnippet is a Boolean attribute: as with all Boolean attributes, any value assigned to it is ignored. To ensure the markup is machine-readable, the HTML section must be valid HTML and every tag must have a matching closing tag.

Google typically renders a page in order to index it, but rendering is not guaranteed. Therefore, data-nosnippet may be extracted both before and after rendering. To avoid rendering uncertainty, do not add or remove the data-nosnippet attribute from existing nodes via JavaScript. When adding DOM elements via JavaScript, include the data-nosnippet attribute as needed when initially adding the element to the page's DOM. If custom elements are used and you need to use data-nosnippet, wrap or render them with div, span, or section elements.
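As a rough illustration of which text remains available for a snippet, here is a Python sketch that walks the HTML and drops text inside span, div, or section elements carrying data-nosnippet. The names are hypothetical; it assumes valid, fully closed markup (which the rules above require anyway) and does not execute JavaScript, so it models the page before rendering only.

```python
from html.parser import HTMLParser

# Per the text above, data-nosnippet is honored on these elements only.
NOSNIPPET_ELEMENTS = {"span", "div", "section"}


class SnippetTextExtractor(HTMLParser):
    """Collect text, skipping anything inside a data-nosnippet element."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: list[tuple[str, bool]] = []  # (tag, excluded?) for open elements
        self.parts: list[str] = []

    def _excluded(self) -> bool:
        return bool(self.stack) and self.stack[-1][1]

    def handle_starttag(self, tag, attrs):
        excluded = self._excluded()  # exclusion is inherited by child elements
        # Boolean attribute: only its presence matters, any value is ignored.
        if tag in NOSNIPPET_ELEMENTS and any(name == "data-nosnippet" for name, _ in attrs):
            excluded = True
        self.stack.append((tag, excluded))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; this also drops void elements
        # such as <br> that never receive an end tag.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break

    def handle_data(self, data):
        if not self._excluded():
            self.parts.append(data)


def snippet_text(html: str) -> str:
    """Return the text a snippet could draw from, with whitespace collapsed."""
    parser = SnippetTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join("".join(parser.parts).split())


page = ('<p>This text can be shown in a snippet.'
        '<span data-nosnippet>This text will not be shown.</span> Back to normal.</p>')
print(snippet_text(page))  # This text can be shown in a snippet. Back to normal.
```

Note that a `<p data-nosnippet>` element is not excluded by this sketch, mirroring the restriction to span, div, and section.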

Use structured data

The robots meta tag controls how much content Google automatically extracts from a page and displays in search results. However, many publishers also use schema.org structured data to provide specific information for search presentation. Robots meta tag restrictions do not affect the use of that structured data, with the exception of article.description and the description values of structured data specified for other creative works. To specify a maximum length for previews based on these description values, use the max-snippet robots meta tag. For example, recipe structured data on a page can be included in a recipe carousel even if the text preview is limited. You can use max-snippet to limit the length of text previews, but this robots meta tag is not applied when structured data is used to provide information for rich results.

To manage how structured data is used on your pages, edit the structured data types and values themselves, adding or removing information so that only the data you want is available. Also note that structured data can still be used to display search results when it is declared within a data-nosnippet element.

Adding the X-Robots-Tag in practice

You can add X-Robots-Tags to your website's HTTP responses through the configuration files of your website's web server software. For example, on Apache-based web servers, you can use .htaccess and httpd.conf files. The benefit of using X-Robots-Tags in HTTP responses is that you can specify crawling instructions that apply to your entire website. Regular expressions are supported, which allows for a high degree of flexibility.

For example, to add a noindex, nofollow X-Robots-Tag to HTTP responses for all .PDF files across your entire site, add the following snippet to your root .htaccess or httpd.conf file for an Apache site, or to your .conf file for an NGINX site.

Apache

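```apache
# Requires mod_headers. Applies only to files whose names end in .pdf.
<Files ~ "\.pdf$">
  Header set X-Robots-Tag "noindex, nofollow"
</Files>
```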

NGINX

```nginx
# Place inside the server { } block; ~* is a case-insensitive regex match.
location ~* \.pdf$ {
  add_header X-Robots-Tag "noindex, nofollow";
}
```
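If a site is served by a Python WSGI application rather than configured in Apache or NGINX, the same idea can be sketched as middleware. `XRobotsTagMiddleware` and `PDF_RULE` are hypothetical names; the regex mirrors the case-insensitive NGINX rule above, and an existing X-Robots-Tag is replaced, much like Apache's `Header set`.

```python
import re

# Mirrors "location ~* \.pdf$": a case-insensitive regex on the request path.
PDF_RULE = (re.compile(r"\.pdf$", re.IGNORECASE), "noindex, nofollow")


class XRobotsTagMiddleware:
    """WSGI middleware that sets X-Robots-Tag on responses whose path matches a rule."""

    def __init__(self, app, rules):
        self.app = app
        self.rules = rules  # list of (compiled regex, header value); first match wins

    def __call__(self, environ, start_response):
        path = environ.get("PATH_INFO", "")
        value = next((v for pattern, v in self.rules if pattern.search(path)), None)

        def add_header(status, headers, exc_info=None):
            if value is not None:
                # Replace any X-Robots-Tag the wrapped app already set.
                headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
                headers.append(("X-Robots-Tag", value))
            return start_response(status, headers, exc_info)

        return self.app(environ, add_header)
```

To use it, wrap an existing WSGI callable, for example `application = XRobotsTagMiddleware(application, [PDF_RULE])`.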

For non-HTML files (such as image files) that cannot use the robots meta tag in HTML, you can use the X-Robots-Tag. The following example shows how to add a noindex X-Robots-Tag directive for image files (.png, .jpeg, .jpg, .gif) across your entire site:

Apache

```apache
<Files ~ "\.(png|jpe?g|gif)$">
  Header set X-Robots-Tag "noindex"
</Files>
```

NGINX

```nginx
location ~* \.(png|jpe?g|gif)$ {
  add_header X-Robots-Tag "noindex";
}
```

You can also set the X-Robots-Tag header for individual static files:

Apache

```apache
# The .htaccess file must be placed in the directory of the matched file.
<Files "unicorn.pdf">
  Header set X-Robots-Tag "noindex, nofollow"
</Files>
```

NGINX

```nginx
location = /secrets/unicorn.pdf {
  add_header X-Robots-Tag "noindex, nofollow";
}
```
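To check which header a given path would receive under rules like the NGINX examples in this section, here is a simplified Python model. It assumes NGINX-style precedence in which an exact `location =` match is used before regex locations, which are tried in the order they are declared; prefix locations are ignored. The rule tables and function name are hypothetical and copy this section's examples.

```python
import re
from typing import Optional

# Hypothetical rule tables copied from this section's NGINX examples.
EXACT_RULES = {"/secrets/unicorn.pdf": "noindex, nofollow"}
REGEX_RULES = [
    (re.compile(r"\.pdf$", re.IGNORECASE), "noindex, nofollow"),
    (re.compile(r"\.(png|jpe?g|gif)$", re.IGNORECASE), "noindex"),
]


def x_robots_tag_for(path: str) -> Optional[str]:
    """Pick the X-Robots-Tag value for a path: exact match first, then the
    first matching regex in declaration order (simplified NGINX model)."""
    if path in EXACT_RULES:
        return EXACT_RULES[path]
    for pattern, value in REGEX_RULES:
        if pattern.search(path):
            return value
    return None


print(x_robots_tag_for("/img/Logo.JPEG"))  # noindex
```

Because the exact table is consulted first, changing the value for /secrets/unicorn.pdf would override the PDF regex for that one file only.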

Combining robots.txt directives with indexing and content serving directives

Robots meta tags and X-Robots-Tag HTTP headers are only visible to crawlers when a URL is crawled. If you block a page in robots.txt, crawlers never see its indexing and serving directives, so those directives are effectively ignored. If the directives must be followed, do not block crawlers from crawling the URLs that contain them.
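One way to catch this mistake is to check whether a URL you marked noindex is disallowed by robots.txt. The sketch below uses Python's `urllib.robotparser` as a stand-in; it implements basic robots.txt rules but not Google's wildcard (`*`, `$`) handling or longest-match precedence, so treat its answer as a hint rather than a prediction of Googlebot's behavior. The function name and example URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def directives_are_visible(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if robots.txt lets the crawler fetch url, so it can see noindex and similar."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


robots_txt = "User-agent: *\nDisallow: /private/\n"
for url in ("https://example.com/private/old.html", "https://example.com/blog/post.html"):
    if not directives_are_visible(robots_txt, url):
        print(f"{url}: blocked by robots.txt, so a noindex on it will never be seen")
```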

Original link: https://developers.google.com/search/docs/advanced/robots/robots_meta_tag?hl=zh-cn