
How do you stop AI scraping bots? Whether you are a content creator or a blogger, you make a living by producing unique, high-quality content. Have you noticed that generative AI platforms such as OpenAI, and crawlers such as CCBot, use your content to train their algorithms without your consent? Don't worry! You can use a robots.txt file to block these AI crawlers from accessing your website or blog.

What is a robots.txt file?

Robots.txt is simply a text file that tells robots (such as search engine crawlers) which pages on your website they may crawl. You can block or allow any robot, good or bad, as long as it follows your robots.txt file. The syntax to block a single robot by its user agent is as follows:

User-agent: {BOT-NAME-HERE}
Disallow: /

Here's how to allow specific robots to crawl your site using a user-agent:

User-agent: {BOT-NAME-HERE}
Allow: /

Where to place the robots.txt file?

Upload the file to your website's root folder, so that its URL looks like one of these (every host, including each subdomain, needs its own robots.txt file):

https://example.com/robots.txt
https://blog.example.com/robots.txt
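Crawlers only ever request robots.txt from the root of a host, whatever page they start from. The hypothetical robots_url helper below is a small sketch of that rule: it keeps the scheme and host (including any port) and replaces everything else with /robots.txt. It is plain string handling, not full URL parsing, and assumes a well-formed http(s) URL as input.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the robots.txt URL that crawlers request
# for the host of any given page URL.
robots_url() {
    local url="$1"
    local scheme="${url%%://*}"     # e.g. "https"
    local rest="${url#*://}"        # e.g. "blog.example.com/2023/08/post/"
    local host="${rest%%[/?#]*}"    # drop path, query string and fragment
    printf '%s://%s/robots.txt\n' "$scheme" "$host"
}

robots_url "https://blog.example.com/2023/08/some-post/"
```

For a page on blog.example.com this prints https://blog.example.com/robots.txt, which is why a subdomain cannot rely on the file at example.com.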

For more information, see the following resources about robots.txt:

- Introduction to robots.txt, from Google.

- What is robots.txt? | How to create a robots.txt file, from Cloudflare.

How to block AI crawlers using robots.txt file

The syntax is the same:

User-agent: {AI-Crawler-Bot-Name-Here}
Disallow: /
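If you block several AI crawlers, generating the groups avoids typos in hand-written rules. A minimal sketch follows; the function name generate_ai_block_rules is hypothetical, and the bot list is simply the set of user agents discussed in this article, so adjust it to the crawlers you actually want to block.

```bash
#!/usr/bin/env bash
# Crawlers discussed in this article (edit as needed)
ai_bots=(GPTBot ChatGPT-User Google-Extended CCBot PerplexityBot)

generate_ai_block_rules() {
    local bot
    for bot in "$@"; do
        # One group per crawler: the User-agent line, then a rule for the whole site
        printf 'User-agent: %s\nDisallow: /\n\n' "$bot"
    done
}

# Print the rules; redirect with ">> robots.txt" to append them to your file
generate_ai_block_rules "${ai_bots[@]}"
```

Each crawler gets its own group here for readability; robots.txt also allows several User-agent lines to share one Disallow rule.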

Block OpenAI using a robots.txt file

Add the following four lines to robots.txt:

User-agent: GPTBot
Disallow: /
User-agent: ChatGPT-User
Disallow: /

Note that OpenAI has two separate user agents for web crawling and browsing, each with its own CIDR/IP address ranges. To configure the firewall rules listed below, you need a solid understanding of networking concepts and root-level access to a Linux server. If you lack these skills, consider hiring a Linux system administrator to block access from the constantly changing IP address ranges; keeping up with them can turn into a game of cat and mouse.

1: ChatGPT-User, used by ChatGPT plugins

OpenAI publishes a list of the user agents used by its crawlers and fetchers, including the CIDR/IP address ranges of the plugin bot, which you can block with your web server firewall. Use the ufw or iptables command on your web server to block 23.98.142.176/28. For example, here is how to block that CIDR range using UFW:

sudo ufw deny proto tcp from 23.98.142.176/28 to any port 80
sudo ufw deny proto tcp from 23.98.142.176/28 to any port 443
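The text mentions iptables as an alternative to ufw. Below is a minimal sketch of an equivalent iptables rule; the helper name block_cidr_iptables and its DRY_RUN switch are hypothetical additions for illustration. The rule covers IPv4 only (IPv6 ranges would need ip6tables), and rules added this way are not kept across a reboot unless you persist them, for example with the iptables-persistent package on Debian/Ubuntu.

```bash
#!/usr/bin/env bash
# Block one CIDR range on TCP ports 80 and 443 with iptables.
# DRY_RUN=1 prints the command instead of running it (running it needs root).
block_cidr_iptables() {
    local cidr="$1"
    # -I puts the rule at the top of INPUT so it is checked before existing ACCEPT rules
    local cmd=(iptables -I INPUT -p tcp -s "$cidr" -m multiport --dports 80,443 -j DROP)
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "${cmd[*]}"
    else
        sudo "${cmd[@]}"
    fi
}

DRY_RUN=1 block_cidr_iptables 23.98.142.176/28
```

The multiport match covers both web ports in a single rule, where the ufw version above needs two.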

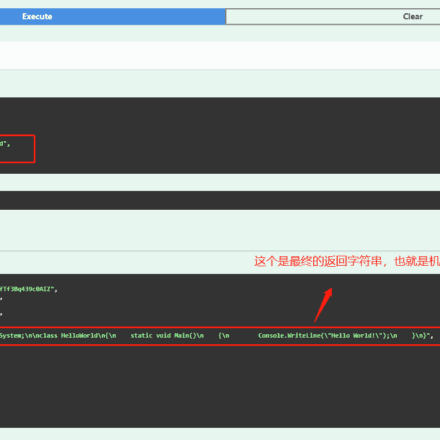

2: GPTBot, used by ChatGPT

OpenAI also lists the CIDR/IP address ranges used by GPTBot, which you can block with your web server firewall. Again, you can use either the ufw or the iptables command. Here is a shell script that downloads the published list and blocks each CIDR range:

#!/bin/bash
# Purpose: Block OpenAI ChatGPT bot CIDR
# Tested on: Debian and Ubuntu Linux
# Author: Vivek Gite {https://www.cyberciti.biz} under GPL v2.x+
# ------------------------------------------------------------------
# Create a private temporary file for the downloaded list
file="$(mktemp)"
wget -q -O "$file" https://openai.com/gptbot-ranges.txt 2>/dev/null
# Read one CIDR per line; "|| [ -n ... ]" also handles a missing final newline
while IFS= read -r cidr || [ -n "$cidr" ]
do
    [ -z "$cidr" ] && continue    # skip blank lines
    sudo ufw deny proto tcp from "$cidr" to any port 80
    sudo ufw deny proto tcp from "$cidr" to any port 443
done < "$file"
[ -f "$file" ] && rm -f "$file"
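The script above hands each downloaded line to ufw with little checking, so an unexpected response (for example an HTML error page if the URL ever changes) would turn into a series of failing ufw commands. Here is a hedged sketch of a stricter filter; valid_ipv4_cidrs is a hypothetical helper, and it assumes the published list contains IPv4 CIDRs only.

```bash
#!/usr/bin/env bash
# Print only well-formed IPv4 CIDR lines from a file; report the rest on stderr.
valid_ipv4_cidrs() {
    local line a b c d len
    local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})/([0-9]{1,2})$'
    while IFS= read -r line || [ -n "$line" ]; do
        line="${line//[[:space:]]/}"    # drop stray spaces and CR characters
        if [[ $line =~ $re ]]; then
            a=${BASH_REMATCH[1]} b=${BASH_REMATCH[2]} c=${BASH_REMATCH[3]}
            d=${BASH_REMATCH[4]} len=${BASH_REMATCH[5]}
            # 10# forces base 10 so octets such as "08" are not read as octal
            if (( 10#$a <= 255 && 10#$b <= 255 && 10#$c <= 255 && 10#$d <= 255 && 10#$len <= 32 )); then
                printf '%s\n' "$line"
                continue
            fi
        fi
        # Comments, HTML and out-of-range values end up here
        if [ -n "$line" ]; then
            printf 'skipping: %s\n' "$line" >&2
        fi
    done < "$1"
}

# Usage inside the script above, in place of its while loop:
#   valid_ipv4_cidrs "$file" | while IFS= read -r cidr; do
#       sudo ufw deny proto tcp from "$cidr" to any port 80
#       sudo ufw deny proto tcp from "$cidr" to any port 443
#   done
```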

Block Google AI (Bard and Vertex AI generation APIs)

Add the following two lines to your robots.txt:

User-agent: Google-Extended
Disallow: /

For more information, see Google's list of the user agents used by its crawlers and fetchers. However, Google does not publish CIDRs, IP address ranges, or Autonomous System Number (ASN) information for its AI bots that you could block with your web server firewall.

Block Common Crawl (CCBot) using a robots.txt file

Add the following two lines to your robots.txt:

User-agent: CCBot
Disallow: /

Although Common Crawl is a non-profit foundation, everyone uses the data collected by its bot, CCBot, to train their AI, so it is important to block it as well. However, just like Google, Common Crawl does not publish CIDR, IP address ranges, or ASN information that you could use to block its bot with your web server firewall.

Block Perplexity AI using a robots.txt file

Perplexity is another service that takes your content and rewrites it using generative AI. You can block it as follows:

User-agent: PerplexityBot
Disallow: /

Perplexity also publishes its IP address ranges, so you can block it using a WAF or your web server firewall.
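After adding rules for GPTBot, ChatGPT-User, Google-Extended, CCBot, and PerplexityBot, it is easy to mistype a name or misplace a line. The following is a simplified sketch, not a full robots.txt parser, of a hypothetical blocks_bot helper that checks a local robots.txt for a group that names a given user agent (case-insensitively) and contains Disallow: /. It deliberately ignores Allow overrides and the catch-all "User-agent: *" group.

```bash
#!/usr/bin/env bash
# Exit 0 if the robots.txt file has a group naming the bot with "Disallow: /".
blocks_bot() {
    local file="$1" bot="$2"
    awk -v bot="$bot" '
        BEGIN { bot = tolower(bot) }
        {
            sub(/#.*/, "")                  # strip comments
            sub(/\r$/, "")                  # tolerate CRLF line endings
            line = tolower($0)
            gsub(/[ \t]/, "", line)         # "User-agent: X" becomes "user-agent:x"
        }
        line ~ /^user-agent:/ {
            if (!in_agents) matched = 0     # a new group starts here
            in_agents = 1
            if (substr(line, 12) == bot) matched = 1
            next
        }
        line ~ /^(allow|disallow):/ { in_agents = 0 }
        matched && line == "disallow:/" { found = 1 }
        END { exit (found ? 0 : 1) }
    ' "$file"
}

# Example:
#   for bot in GPTBot ChatGPT-User Google-Extended CCBot PerplexityBot; do
#       if blocks_bot robots.txt "$bot"; then echo "$bot: blocked"; else echo "$bot: NOT blocked"; fi
#   done
```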

Can AI bots ignore my robots.txt file?

Well-known companies like Google and OpenAI usually honor the robots.txt protocol. However, some poorly designed AI bots simply ignore your robots.txt.
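Because robots.txt is only a request, your web server's access log is one place to spot bots that ignore it. A minimal sketch, assuming the user agent string appears on each log line (as it does in the common "combined" log format); count_ai_bot_hits is a hypothetical helper. Google-Extended is left out because Google documents it as a robots.txt token rather than a separate crawler user agent, and keep in mind that user agent strings can be spoofed.

```bash
#!/usr/bin/env bash
# Count log lines that mention each AI crawler's user agent (case-insensitive).
count_ai_bot_hits() {
    local log="$1" bot n
    for bot in GPTBot ChatGPT-User CCBot PerplexityBot; do
        # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps set -e scripts alive
        n=$(grep -ciF -- "$bot" "$log" || true)
        printf '%s %s\n' "$bot" "$n"
    done
}

# Example: count_ai_bot_hits /var/log/nginx/access.log
```

Running it before and after a robots.txt change gives a rough idea of which crawlers actually stopped.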

Can AWS or Cloudflare WAF technology be used to block AI bots?

Cloudflare recently announced a new firewall rule that blocks AI bots, while search engines and other bots can still access your website or blog through their WAF rules. Keep in mind that WAF rules require a thorough understanding of how bots operate and must be implemented with care; otherwise, legitimate users may also be blocked. See Cloudflare's tips on how to block AI bots with its WAF.

Note that I am still evaluating the Cloudflare solution, but my initial testing shows that it blocks at least 3.31% of users. That 3.31% is the CSR (Challenge Solve Rate), i.e. the share of visitors who solved the CAPTCHA presented by Cloudflare. This is a high CSR, so I need to do more testing. I will update this blog post once I start using Cloudflare.

Can I block access to code and documents hosted on GitHub and other cloud hosting sites?

No, I'm not aware of any way to do that.

I have concerns about using GitHub, which is owned by Microsoft, the largest investor in OpenAI. Microsoft may use your data to train AI through terms of service updates and other loopholes. It is best for you or your company to host your own Git server to prevent your data and code from being used for training. Large companies such as Apple prohibit internal use of ChatGPT and similar products because they are worried that it may lead to leaks of code and sensitive data.

When AI is used to benefit humanity, is it ethical to prevent AI robots from obtaining training data?

I am skeptical about using OpenAI, Google Bard, Microsoft Bing, or any other AI for the benefit of humanity. It seems like just a scheme to make money while generative AI replaces white-collar jobs. However, if you have any information on how my data could be used to cure cancer (or something similar), feel free to share it in the comments section.

My personal opinion is that I do not benefit from OpenAI/Google/Bing AI or any other AI right now. I have worked hard for over 20 years, and I need to protect my work from direct profiteering by these big tech companies. You don't have to agree with me. You can hand over your code and other work to AI if you want; remember, it is optional. The only reason they offer robots.txt controls now is that multiple book authors and companies are suing them in court. On top of these issues, AI tools have been used to create spam websites and e-books. See below for selected reading:

- Sarah Silverman sues OpenAI and Meta

- AI image creators face legal challenges in UK and US

- Stack Overflow is a casualty of ChatGPT: Traffic dropped by 14% in March

- Author uses ChatGPT to spam Kindle books

- ChatGPT banned in Italy over privacy concerns

- AI spam is already spreading across the internet, and there are clear signs of it

- Experts warn AI could lead to extinction

- Artificial intelligence is used to generate entire spam websites

- The company plans to abandon human employees and adopt ChatGPT-style artificial intelligence

- The Secret List of Websites That Make ChatGPT and Other AIs Sound Smart

It’s true that AI already uses much of your data, but anything you create in the future can be protected by these technologies.

Summing up

As generative AI becomes more popular, content creators are starting to question AI companies for using data without permission to train their models. They profit from the code, text, images, and videos created by millions of small independent creators while depriving them of their income streams. Some people may not object, but I know that such a sudden move would devastate many people. Therefore, website operators and content creators should be able to easily block unwanted AI crawlers. The process should be simple.

I will update this page as more bots can be blocked via robots.txt and using cloud solutions provided by third parties such as Cloudflare.

Other open source projects to block bots

- Nginx Bad Bot and User Agent Blocker

- Fail2Ban: scans log files such as /var/log/auth.log and bans IP addresses that make too many failed login attempts.