
Step-by-step tutorial on installing io.net on Windows and connecting new devices

io.net Cloud is a state-of-the-art decentralized computing network that allows machine learning engineers to access distributed cloud clusters at a fraction of the cost of comparable centralized services.

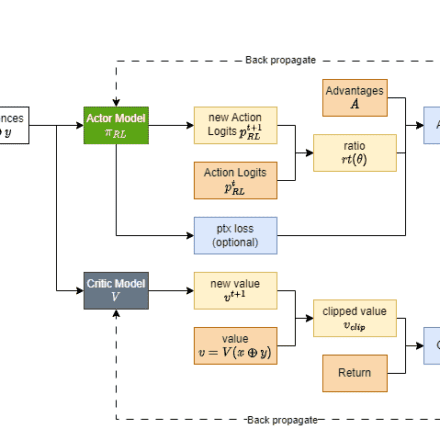

Modern machine learning models often rely on parallel and distributed computing. To optimize performance or scale to larger datasets and models, it is critical to harness multiple cores across multiple systems. Training and inference are rarely simple tasks running on a single device; they often involve a coordinated network of GPUs working together.

Unfortunately, as demand for GPUs in public clouds grows, obtaining distributed computing resources has become harder. The most prominent challenges are:

- Limited availability: Accessing hardware through cloud services like AWS, GCP, or Azure often takes weeks, and popular GPU models are frequently unavailable.

- Limited choice: Users have little choice of GPU hardware, location, security level, latency, and other options.

- High cost: Good GPUs are expensive, and projects can easily spend hundreds of thousands of dollars per month on training and inference.

io.net solves these problems by aggregating GPUs from underutilized sources such as independent data centers, crypto miners, and crypto projects like Filecoin and Render. These resources are combined in a Decentralized Physical Infrastructure Network (DePIN), giving engineers access to massive amounts of computing power in a system that is accessible, customizable, cost-effective, and easy to implement.

io.net documentation: https://developers.io.net/docs/overview

Tutorial on installing io.net and connecting a new device

First, go to cloud.io.net and log in with your Google account or X account.

1. Navigate to WORKER from the drop-down menu

2. Connect a new device

Click "Connect a new device"

3. Choose a supplier

Select the supplier you want to group your hardware under.

4. Name your device

Add a unique name for your device, ideally in a format similar to the following: My-Test-Device

5. Select the operating system (OS)

Click Windows.

6. Device Type

Select the device type. If you select GPU Worker and your device does not have a GPU, the setup will fail.

7. Docker and Nvidia driver installation

Follow the Docker, CUDA, and NVIDIA driver installation steps in the sections below.

8. Run Docker commands

Run the command shown on the page in a terminal, and make sure Docker Desktop is running in the background.

9. Wait for connection

Click Refresh periodically while you wait for the new device to connect.
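Before running the command from step 8, a quick preflight check can catch the most common failures: a missing Docker CLI, Docker Desktop not running, or no NVIDIA driver on a GPU worker. The sketch below is not part of io.net's tooling; it assumes only that `docker info` exits with a non-zero code when the Docker engine is unreachable, and it treats a missing `nvidia-smi` as a sign that the NVIDIA driver is not installed. The `--cpu` switch is a hypothetical option of this script, not an io.net flag.

```python
import shutil
import subprocess
import sys
from typing import Callable, List, Optional


def preflight(
    which: Callable[[str], Optional[str]] = shutil.which,
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
    need_gpu: bool = True,
) -> List[str]:
    """Return a list of detected problems; an empty list means all checks passed."""
    problems = []
    if which("docker") is None:
        problems.append("docker CLI not found on PATH")
    else:
        # 'docker info' exits non-zero when the client cannot reach the engine,
        # for example when Docker Desktop is not running.
        result = run(["docker", "info"], capture_output=True, text=True)
        if result.returncode != 0:
            problems.append("Docker engine not reachable (is Docker Desktop running?)")
    if need_gpu and which("nvidia-smi") is None:
        # nvidia-smi ships with the NVIDIA driver; its absence usually means no driver.
        problems.append("nvidia-smi not found; the NVIDIA driver may be missing")
    return problems


if __name__ == "__main__":
    # Hypothetical switch: pass --cpu when setting up a CPU worker.
    issues = preflight(need_gpu="--cpu" not in sys.argv)
    for issue in issues:
        print("-", issue)
    print("All checks passed" if not issues else f"{len(issues)} problem(s) found")
```

Injecting `which` and `run` keeps the checks testable without Docker installed.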

Docker Installation on Windows

First, you need to enable virtualization in the BIOS/UEFI.

To check whether it is enabled, open Task Manager, go to the Performance tab, and select CPU; the Virtualization field shows Enabled or Disabled.

If it is not enabled, follow these steps:

- To enable virtualization technology, open your computer's BIOS or UEFI configuration menu during the boot process and turn on the virtualization option (often labeled Intel VT-x / Intel Virtualization Technology, or AMD-V / SVM Mode). The exact steps vary depending on the manufacturer and model of your computer.

- Install WSL 2 by opening PowerShell as an administrator. To do this, search for "PowerShell" in the Start menu, right-click "Windows PowerShell", and select "Run as Administrator".

- Run the following command to enable WSL functionality in Windows 10/11:

```
dism.exe /online /enable-feature /featurename:Microsoft-Windows-Subsystem-Linux /all /norestart
```

- Then, enable the Virtual Machine Platform feature in the same PowerShell window by running the following command:

```
dism.exe /online /enable-feature /featurename:VirtualMachinePlatform /all /norestart
```

- Then set WSL 2 as the default version (you may need to restart your computer first):

```
wsl --set-default-version 2
```
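Besides Task Manager, the `systeminfo` command reports firmware virtualization in its "Hyper-V Requirements" section. The helper below is a sketch that parses that text; it assumes an English-language Windows (the labels are localized), and it treats the "A hypervisor has been detected" message, which Windows prints once Hyper-V or WSL 2 is already running, as a separate case.

```python
import subprocess
import sys


def virtualization_status(systeminfo_text: str) -> str:
    """Classify firmware virtualization from `systeminfo` output (English labels assumed)."""
    for line in systeminfo_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("Virtualization Enabled In Firmware:"):
            value = stripped.split(":", 1)[1].strip()
            return "enabled" if value.lower() == "yes" else "disabled"
        if "A hypervisor has been detected" in stripped:
            # With a hypervisor already running, systeminfo omits the individual
            # requirement lines, which implies virtualization is already enabled.
            return "hypervisor running"
    return "unknown"


if __name__ == "__main__":
    if sys.platform == "win32":
        output = subprocess.run(["systeminfo"], capture_output=True, text=True).stdout
        print(virtualization_status(output))
    else:
        print("systeminfo is only available on Windows")
```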

Download Docker:

Visit the Docker website at https://www.docker.com/products/docker-desktop/ and click "Download for Windows".

Run the installer and restart the machine when it finishes.

Start Docker Desktop and make sure WSL 2 is used as the backend (in Settings > General, enable "Use the WSL 2 based engine").

Verify the installation by opening a command prompt (CMD) and typing:

```
docker --version
```

You should see output similar to the following (your version and build may differ):

```
Docker version 24.0.6, build ed223bc
```

That's it. You have docker installed and ready.
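If you want to script this check (for example, as part of a setup script), you can parse the version string. This is a sketch that assumes the `Docker version X.Y.Z, build ...` format shown above; the guide does not state a minimum Docker version, so the sketch only extracts the numbers.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# Matches the line printed by `docker --version`,
# e.g. "Docker version 24.0.6, build ed223bc".
_DOCKER_VERSION = re.compile(r"Docker version (\d+)\.(\d+)\.(\d+)")


def parse_docker_version(output: str) -> Optional[Tuple[int, int, int]]:
    """Return (major, minor, patch), or None if the text is not recognized."""
    match = _DOCKER_VERSION.search(output)
    if match is None:
        return None
    major, minor, patch = (int(group) for group in match.groups())
    return major, minor, patch


if __name__ == "__main__":
    if shutil.which("docker") is None:
        print("docker not found on PATH")
    else:
        out = subprocess.run(["docker", "--version"], capture_output=True, text=True).stdout
        print(parse_docker_version(out))
```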

Nvidia driver installation on Windows

- To check whether you have the correct driver, open a command prompt on your Windows PC (Windows key + R, type cmd) and run nvidia-smi. If you see the following error message:

```
C:\Users>nvidia-smi
'nvidia-smi' is not recognized as an internal or external command,
operable program or batch file.
```

This means that you do not have the NVIDIA drivers installed. To install them, follow these steps:

- Visit the NVIDIA driver download page at https://www.nvidia.com/download/index.aspx, enter your GPU model, and click Search.

- Click the Download button for the appropriate NVIDIA driver for your GPU and version of Windows.

- Once the download is complete, start the installation, select the first option, and click "Agree and Continue".

- After the installation is complete, you must restart your computer. Restarting your computer ensures that the new NVIDIA driver is fully integrated into your system.

- After your computer restarts, open a command prompt (Windows key + R, type cmd) and type the following command:

```
nvidia-smi
```

- You should see a table listing your GPU along with the driver version and the supported CUDA version.

That's it. You have the correct NVIDIA drivers installed and ready to go.
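For scripting, nvidia-smi can print just the fields you need with `--query-gpu`. The sketch below reads each GPU's name and driver version from `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader`; the exact names and version numbers depend on your hardware and driver.

```python
import shutil
import subprocess
from typing import List, Tuple

# nvidia-smi query flags: one CSV line per GPU, without a header row.
QUERY = ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"]


def parse_gpu_query(output: str) -> List[Tuple[str, str]]:
    """Turn 'name, driver_version' lines into (name, driver_version) pairs."""
    gpus = []
    for line in output.splitlines():
        if "," not in line:
            continue  # skip blank or unexpected lines
        # Split on the last comma so the GPU name is kept whole.
        name, driver = line.rsplit(",", 1)
        gpus.append((name.strip(), driver.strip()))
    return gpus


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found: the NVIDIA driver is probably not installed")
    else:
        out = subprocess.run(QUERY, capture_output=True, text=True).stdout
        for name, driver in parse_gpu_query(out):
            print(f"{name}: driver {driver}")
```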

Download CUDA Toolkit (optional)

- Visit the NVIDIA CUDA Toolkit download page: https://developer.nvidia.com/cuda-downloads

- Select your operating system (e.g. Windows).

- Select your architecture (usually x86_64 for 64-bit Windows).

- Choose the exe (local) installer, download it, and run it.

- Follow the installation prompts.

- Then verify the installation. Open a command prompt (Windows key + R, type cmd) and type the following command:

```
nvcc --version
```

- You should see output similar to this:

```
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2022 NVIDIA Corporation
Built on Wed_Sep_21_10:41:10_Pacific_Daylight_Time_2022
Cuda compilation tools, release 11.8, V11.8.89
Build cuda_11.8.r11.8/compiler.31833905_0
```

That's it. You have the CUDA Toolkit installed and ready to go.
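Because the toolkit is optional, a version mismatch is easy to miss: the "CUDA Version" in the nvidia-smi header is the highest CUDA version the installed driver supports, and a toolkit newer than that may not run. The sketch below compares the two as a rough sanity check; it does not model NVIDIA's minor-version compatibility rules, and it assumes the English output formats shown in this guide.

```python
import re
from typing import Optional, Tuple

# "Cuda compilation tools, release 11.8, V11.8.89" in `nvcc --version` output
_TOOLKIT = re.compile(r"release (\d+)\.(\d+)")
# "CUDA Version: 12.2" in the header of plain `nvidia-smi` output
_DRIVER = re.compile(r"CUDA Version:\s*(\d+)\.(\d+)")


def _version(pattern: "re.Pattern[str]", text: str) -> Optional[Tuple[int, int]]:
    match = pattern.search(text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def toolkit_fits_driver(nvcc_output: str, nvidia_smi_output: str) -> Optional[bool]:
    """True if the toolkit release is <= the CUDA version the driver reports.

    Returns None when either version string cannot be found.
    """
    toolkit = _version(_TOOLKIT, nvcc_output)
    driver = _version(_DRIVER, nvidia_smi_output)
    if toolkit is None or driver is None:
        return None
    return toolkit <= driver  # tuple comparison: (major, minor)
```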

Note that the worker setup downloads a container of about 20 GB that includes all the packages the ML workloads need. Everything runs inside the container, and nothing is copied from the container to the host file system.
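Since the container is large, it can help to confirm free disk space first. A minimal sketch using Python's `shutil.disk_usage`; it assumes decimal gigabytes for the "20 GB" figure, and because that figure is approximate you may want to leave extra headroom. On Windows, Docker Desktop keeps its WSL 2 data on the system drive by default, so pass that drive (typically `C:\`) as `path`.

```python
import shutil
from typing import Callable

GB = 10 ** 9  # decimal gigabytes, matching the ~20 GB figure above


def has_room_for_image(path: str = ".", required_gb: float = 20,
                       usage: Callable = shutil.disk_usage) -> bool:
    """Return True if the drive holding `path` has at least `required_gb` free."""
    return usage(path).free >= required_gb * GB


if __name__ == "__main__":
    # On Windows, pass the drive that holds Docker Desktop's data, e.g. "C:\\".
    print(has_room_for_image("."))
```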

Troubleshooting Guide

Troubleshooting guide for GPU-enabled Docker platforms

Verify Docker setup on Linux and Windows

- To verify that your setup is working properly, execute:

```
docker run --gpus all nvidia/cuda:11.0.3-base-ubuntu18.04 nvidia-smi
```

- The output should be similar to what nvidia-smi prints on the host.

- This command checks whether Docker can access your GPU.
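To run this check from a script, a sketch like the following wraps the same command. It adds two real options that the command above does not use: Docker's `--rm`, so the test container is deleted afterwards, and `nvidia-smi -L`, which prints one short `GPU n: ...` line per visible GPU and is easier to check than the full table. Note that the first run pulls the image, which can take a while.

```python
import subprocess
from typing import Callable

# Same image as the command above.
TEST_IMAGE = "nvidia/cuda:11.0.3-base-ubuntu18.04"


def docker_sees_gpu(run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
                    image: str = TEST_IMAGE) -> bool:
    """Return True if a container started with --gpus all can list at least one GPU."""
    cmd = ["docker", "run", "--rm", "--gpus", "all", image, "nvidia-smi", "-L"]
    try:
        result = run(cmd, capture_output=True, text=True)
    except FileNotFoundError:
        return False  # the docker CLI itself is not installed
    # `nvidia-smi -L` lists GPUs as "GPU 0: <name> (UUID: ...)".
    return result.returncode == 0 and "GPU 0" in result.stdout
```

Call `docker_sees_gpu()` from your own script; a `False` result means either Docker is missing or the container could not see the GPU.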

Stop Platform

Windows (using PowerShell):

```
docker ps -a -q | ForEach { docker rm -f $_ }
```

Spotty uptime on Windows?

Make sure the DHCP lease time on your router is set to more than 24 hours. Then, in Windows, open the Local Group Policy Editor (gpedit.msc, which is not included in Windows Home editions) and navigate as follows:

- Navigate to Computer Configuration in the Group Policy Editor.

- In Computer Configuration, locate the Administrative Templates section.

- In the Administrative Templates section, navigate to System.

- From the System menu, select Power Management.

- Finally, access the Sleep Settings subsection in Power Management.

- In the "Sleep settings" submenu, activate the "Allow network connections during connected standby (on battery)" and "Allow network connections during connected standby (plugged in)" options.

- Apply the changes. If they do not take effect immediately, run gpupdate /force or restart the computer.
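The DHCP lease time itself is set on the router, but you can check the lease Windows actually received: `ipconfig /all` prints "Lease Obtained" and "Lease Expires" for each DHCP-enabled adapter. The sketch below subtracts the two. It assumes English (US) date formatting such as `Monday, January 1, 2024 10:00:00 AM`; other locales format these lines differently and would need a different format string.

```python
import subprocess
import sys
from datetime import datetime, timedelta
from typing import List

# Timestamp format assumed for ipconfig on English (US) Windows.
_FMT = "%A, %B %d, %Y %I:%M:%S %p"


def _value(line: str) -> str:
    # ipconfig pads each label with ". . ." and separates it from the value
    # with " : "; the time itself contains ":" but not " : ".
    return line.split(" : ", 1)[1].strip()


def lease_durations(ipconfig_all: str) -> List[timedelta]:
    """Return one lease length (Expires - Obtained) per DHCP-enabled adapter."""
    durations = []
    obtained = None
    for line in ipconfig_all.splitlines():
        stripped = line.strip()
        if stripped.startswith("Lease Obtained"):
            obtained = datetime.strptime(_value(stripped), _FMT)
        elif stripped.startswith("Lease Expires") and obtained is not None:
            durations.append(datetime.strptime(_value(stripped), _FMT) - obtained)
            obtained = None
    return durations


if __name__ == "__main__":
    if sys.platform == "win32":
        text = subprocess.run(["ipconfig", "/all"], capture_output=True, text=True).stdout
        for lease in lease_durations(text):
            print(lease, "OK" if lease > timedelta(hours=24) else "shorter than 24 hours")
```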

Which ports need to be open on the firewall for the platform to work properly (Linux and Windows)?

TCP: 443, 25061, 5432, 80

UDP: 80, 443, 41641, 3478
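The guide lists the ports but not the hosts they connect to, so any automated check needs a target you choose yourself. The sketch below tries a TCP connection to each listed TCP port on a host you supply (the `host` argument is a placeholder, not an io.net endpoint). UDP is connectionless, so the UDP ports cannot be verified this way and are only recorded for reference.

```python
import socket
from typing import Callable, Dict, Iterable

TCP_PORTS = (443, 25061, 5432, 80)
UDP_PORTS = (80, 443, 41641, 3478)  # listed for reference; not probed below


def probe_tcp(host: str, ports: Iterable[int] = TCP_PORTS, timeout: float = 3.0,
              connect: Callable = socket.create_connection) -> Dict[int, bool]:
    """Map each port to True if a TCP connection to (host, port) succeeds."""
    results = {}
    for port in ports:
        try:
            # create_connection returns a socket; the with-block closes it.
            with connect((host, port), timeout=timeout):
                results[port] = True
        except OSError:  # refused, timed out, unreachable, DNS failure, ...
            results[port] = False
    return results
```

For example, `probe_tcp("test-host.example")` (a hypothetical host) returns a dictionary such as `{443: True, 25061: False, ...}`, pointing at the ports a firewall may be blocking.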

How can I verify that the program started successfully?

When you run the following command in PowerShell (Windows) or a terminal (Linux), you should always see two Docker containers running:

```
docker ps
```

If no container, or only one, is running after you execute the docker run -d ... command from the website:

Stop the platform (see Stop Platform above) and start it again using the command from the website.

If it still doesn't work, contact the io.net Discord community for help: https://discord.com/invite/kqFzFK7fg2
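To automate the container check, you can count running containers with `docker ps --format "{{.Names}}"`. This sketch counts every running container, not only io.net's, because the guide does not name the io.net containers; if you run other containers on the same machine, check the names by eye.

```python
import subprocess
from typing import Callable, List


def running_containers(run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> List[str]:
    """Names of all running containers, one per line of `docker ps --format`."""
    result = run(["docker", "ps", "--format", "{{.Names}}"],
                 capture_output=True, text=True, check=True)
    return [name.strip() for name in result.stdout.splitlines() if name.strip()]


def platform_looks_up(names: List[str], expected: int = 2) -> bool:
    """The guide expects two running containers; fewer suggests a failed start."""
    return len(names) >= expected
```

If `platform_looks_up(running_containers())` returns `False`, stop and restart the platform as described above.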

io.net supported devices

GPU

| Manufacturer | GPU Model |
|---|---|
| NVIDIA | A10 |
| NVIDIA | A100 80G PCIe NVLink |
| NVIDIA | A100 80GB PCIe |
| NVIDIA | A100-PCIE-40GB |
| NVIDIA | A100-SXM4-40GB |
| NVIDIA | A40 |
| NVIDIA | A40 PCIe |
| NVIDIA | A40-8Q |
| NVIDIA | GeForce RTX 3050 Laptop |
| NVIDIA | GeForce RTX 3050 Ti Laptop |
| NVIDIA | GeForce RTX 3060 |
| NVIDIA | GeForce RTX 3060 Laptop |
| NVIDIA | GeForce RTX 3060 Ti |
| NVIDIA | GeForce RTX 3070 |
| NVIDIA | GeForce RTX 3070 Laptop |
| NVIDIA | GeForce RTX 3070 Ti |
| NVIDIA | GeForce RTX 3070 Ti Laptop |
| NVIDIA | GeForce RTX 3080 |
| NVIDIA | GeForce RTX 3080 Laptop |
| NVIDIA | GeForce RTX 3080 Ti |
| NVIDIA | GeForce RTX 3080 Ti Laptop |
| NVIDIA | GeForce RTX 3090 |
| NVIDIA | GeForce RTX 3090 Ti |
| NVIDIA | GeForce RTX 4060 |
| NVIDIA | GeForce RTX 4060 Laptop |
| NVIDIA | GeForce RTX 4060 Ti |
| NVIDIA | GeForce RTX 4070 |
| NVIDIA | GeForce RTX 4070 Laptop |
| NVIDIA | GeForce RTX 4070 SUPER |
| NVIDIA | GeForce RTX 4070 Ti |
| NVIDIA | GeForce RTX 4070 Ti SUPER |
| NVIDIA | GeForce RTX 4080 |
| NVIDIA | GeForce RTX 4080 SUPER |
| NVIDIA | GeForce RTX 4090 |
| NVIDIA | GeForce RTX 4090 Laptop |
| NVIDIA | H100 80G PCIe |
| NVIDIA | H100 PCIe |
| NVIDIA | L40 |
| NVIDIA | Quadro RTX 4000 |
| NVIDIA | RTX 4000 SFF Ada Generation |
| NVIDIA | RTX 5000 |
| NVIDIA | RTX 5000 Ada Generation |
| NVIDIA | RTX 6000 Ada Generation |
| NVIDIA | RTX 8000 |
| NVIDIA | RTX A4000 |
| NVIDIA | RTX A5000 |
| NVIDIA | RTX A6000 |
| NVIDIA | Tesla P100 PCIe |
| NVIDIA | Tesla T4 |
| NVIDIA | Tesla V100-SXM2-16GB |
| NVIDIA | Tesla V100-SXM2-32GB |
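nvidia-smi often reports names with an "NVIDIA " prefix (and laptop parts with a "Laptop GPU" suffix), while the table above omits them. A sketch for matching the two, assuming those are the only differences; the naming rules are inferred from the table, not documented by io.net, so treat a "not supported" result as a prompt to check the table manually. Only a few rows are copied into `_TABLE_ROWS`; extend it with the rows you need.

```python
from typing import FrozenSet, Iterable

# A few rows copied from the table above; extend with the rows you care about.
_TABLE_ROWS = [
    "GeForce RTX 3060", "GeForce RTX 3060 Laptop", "GeForce RTX 3080",
    "GeForce RTX 4070 SUPER", "GeForce RTX 4090", "RTX A6000",
    "A100-PCIE-40GB", "Tesla T4",
]


def normalize(name: str) -> str:
    """Map a reported name such as 'NVIDIA GeForce RTX 3060 Laptop GPU'
    onto the table's style ('GeForce RTX 3060 Laptop'), case-insensitively."""
    name = " ".join(name.split())  # collapse repeated whitespace
    if name.startswith("NVIDIA "):
        name = name[len("NVIDIA "):]
    if name.endswith(" GPU"):
        name = name[: -len(" GPU")]
    return name.casefold()


def build_supported(rows: Iterable[str]) -> FrozenSet[str]:
    return frozenset(normalize(row) for row in rows)


SUPPORTED = build_supported(_TABLE_ROWS)


def is_supported(reported_name: str) -> bool:
    return normalize(reported_name) in SUPPORTED
```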

CPU

| Manufacturer | CPU Model |
|---|---|
| Apple | M1 Pro |
| Apple | M1 |
| Apple | M1 Max |
| Apple | M2 |
| Apple | M2 Max |
| Apple | M2 Pro |
| Apple | M3 |
| Apple | M3 Max |
| Apple | M3 Pro |
| AMD | QEMU Virtual CPU version 2.5+ |